Developing Audio & Video Players in AngularJS | sTeam-web-UI | sTeam

The sTeam web interface is part of sTeam, a collaboration platform, so there was a need to support media. Any collaboration platform needs support for basic and diverse MIME types. In AngularJS, ngVideo and ngAudio are two useful packages for building audio and video players in an Angular application. So let us see how to go about implementing the media players.

Controllers and templates:

- sTeam-web-UI/newUI/audio-player: the audio controller and template
- sTeam-web-UI/newUI/video-player: the video controller and template

Using ngAudio

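    // Declares the 'steam' module with ngAudio as a dependency.
    // Note: angular.module(name, [deps]) (re)creates the module; other files
    // should use the getter angular.module('steam') instead of redeclaring it.
    angular.module('steam', ['ngAudio'])

      // Shared cache (url -> audio object) so a track is not reloaded
      // every time the player controller is instantiated.
      .value('songRemember', {})

      // Holds the list of audio resources.
      .controller('sTeamaudioResource', ['$scope', 'ngAudio', function ($scope, ngAudio) {
        $scope.audios = [
          ngAudio.load('audio/test.mp3')
        ];
      }])

      // Controls playback of a single track.
      .controller('sTeamaudioPlayer', ['$scope', 'ngAudio', 'songRemember',
        function ($scope, ngAudio, songRemember) {
          var url = 'test.mp3';

          if (songRemember[url]) {
            // Reuse the already-loaded audio object (keeps position and volume).
            $scope.audio = songRemember[url];
          } else {
            // First visit: load the track, set a default volume and remember it.
            $scope.audio = ngAudio.load(url);
            $scope.audio.volume = 0.8;
            songRemember[url] = $scope.audio;
          }
        }]);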
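The `songRemember` value registered above works as a shared cache: values registered with AngularJS are singletons, so the object survives when the player controller is re-created, and the controller reuses an already-loaded audio object for the same URL instead of loading it again. A minimal plain-JavaScript sketch of that load-once pattern follows, assuming a hypothetical `loadAudio` function as a stand-in for `ngAudio.load`:

```javascript
// Plain-JavaScript sketch of the "songRemember" load-once cache.
// loadAudio is a hypothetical stand-in for ngAudio.load(url).
function createAudioCache(loadAudio) {
  const remembered = new Map(); // plays the role of songRemember: url -> audio object

  return function getAudio(url) {
    if (remembered.has(url)) {
      // Already loaded: reuse the same object, keeping its position and volume.
      return remembered.get(url);
    }
    const audio = loadAudio(url);
    audio.volume = 0.8; // default volume, as in the controller above
    remembered.set(url, audio);
    return audio;
  };
}

// Usage with a fake loader that counts how often it is called.
let loads = 0;
const getAudio = createAudioCache(function (url) {
  loads += 1;
  return { src: url, volume: 1 };
});

const first = getAudio('test.mp3');
first.volume = 0.5; // the user changes the volume
const second = getAudio('test.mp3');
console.log(loads, second === first, second.volume); // 1 true 0.5
```

Keying the cache by URL means each distinct track is loaded once per page session; tracks are never evicted, which is fine for a short playlist but worth revisiting for large collections.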

- First, create a controller for storing the resources. Store the items in an Array or an Object; which one depends on your use case, but make sure that you reference the resources correctly.
- Next, create another controller that handles the control of the audio player. This controller should be responsible for handling the URIs of the media.
- In this second controller, add the configuration needed to support the front-end controls: the progress of the media, the volume, the name of the media and so on. These are essential for the front end, so every resource loaded into the audio player must provide all of the controls and information listed above.
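The values the second controller exposes (progress, volume, name) can be kept in a small pure helper that the template binds to. The sketch below assumes an audio object shaped like the ones ngAudio returns, with `currentTime` and `remaining` in seconds, `progress` as a fraction from 0 to 1 and `volume` from 0 to 1; the helper names `formatTime` and `describeTrack` are hypothetical.

```javascript
// Hypothetical helpers for the audio player's front-end controls.
// Assumes an ngAudio-like object: currentTime and remaining in seconds,
// progress as a fraction from 0 to 1, volume from 0 to 1.

// Format a number of seconds as m:ss (e.g. 75 -> "1:15").
function formatTime(seconds) {
  const total = Math.max(0, Math.floor(seconds || 0));
  const mins = Math.floor(total / 60);
  const secs = total % 60;
  return mins + ':' + String(secs).padStart(2, '0');
}

// Build the values the template displays for one track.
function describeTrack(name, audio) {
  // Keep fractions inside [0, 1]; missing values count as 0.
  const clamp = function (x) { return Math.min(1, Math.max(0, x || 0)); };
  return {
    name: name,
    elapsed: formatTime(audio.currentTime),
    remaining: formatTime(audio.remaining),
    percent: Math.round(clamp(audio.progress) * 100),
    volumePercent: Math.round(clamp(audio.volume) * 100)
  };
}

const view = describeTrack('test.mp3', {
  currentTime: 75, remaining: 125, progress: 0.375, volume: 0.8
});
console.log(view.elapsed, view.remaining, view.percent, view.volumePercent); // 1:15 2:05 38 80
```

Keeping this formatting out of the controller makes it easy to test without a browser; the controller would only assign the result (or call the helper from the template) as playback progresses.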

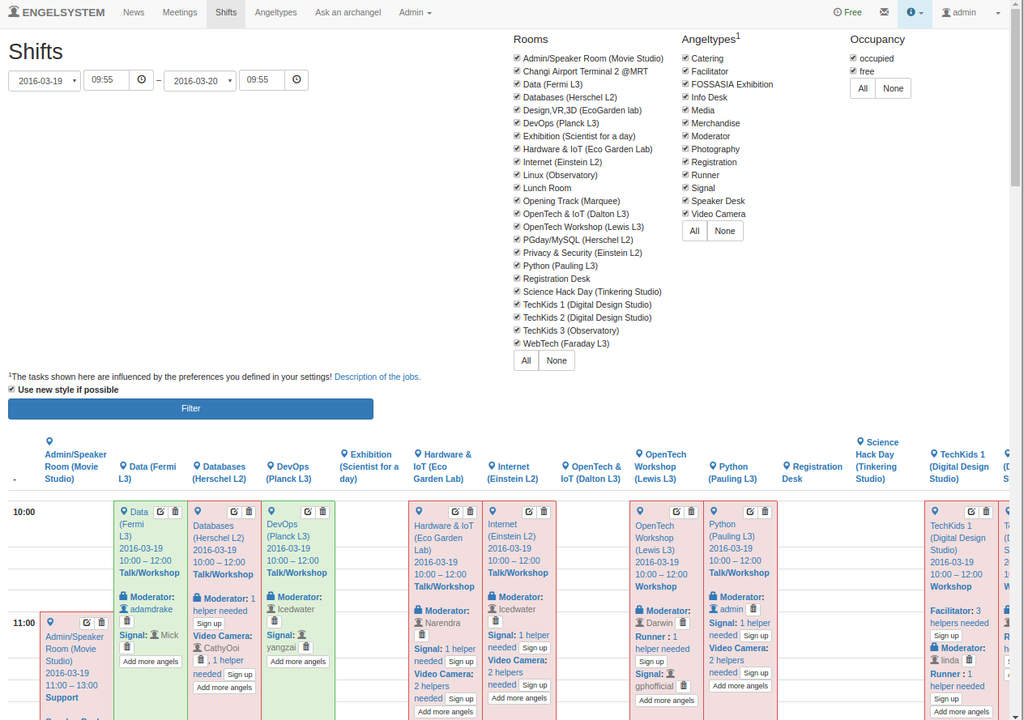

Below is the screenshot of the audio player

Using ngVideo

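    // Declares the 'steam' module with ngVideo as a dependency. If the module is
    // already declared elsewhere (e.g. for the audio player), add 'ngVideo' to that
    // declaration and use the getter angular.module('steam') here instead, because
    // calling angular.module with a dependency array replaces the existing module.
    angular.module('steam', ['ngVideo'])

      .controller('sTeamvideoPlayer', ['$scope', '$timeout', 'video', function ($scope, $timeout, video) {
        // Player interface object; interface.options is used in the $videoReady handler below.
        $scope.interface = {};
        $scope.playlistopen = false;

        // Available videos: name -> source URL.
        $scope.videos = {
          test1: 'test1.mp4',
          test2: 'test2.mp4'
        };

        // Once the player is ready, enable autoplay for the playlist.
        $scope.$on('$videoReady', function videoReady() {
          $scope.interface.options.setAutoplay(true);
        });

        // Add a source; the third argument asks ngVideo to play it right away.
        $scope.playVideo = function playVideo(sourceUrl) {
          video.addSource('mp4', sourceUrl, true);
        };

        // Map a video model's source URL back to a display name.
        $scope.getVideoName = function getVideoName(videoModel) {
          switch (videoModel.src) {
            case $scope.videos.test1: return 'test1';
            case $scope.videos.test2: return 'test2';
            default: return 'Unknown Video';
          }
        };

        // Populate the default playlist.
        video.addSource('mp4', $scope.videos.test1);
        video.addSource('mp4', $scope.videos.test2);
      }]);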
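The `switch` in `getVideoName` needs a new `case` for every video added to `$scope.videos`. One alternative, sketched below in plain JavaScript, builds the source-to-name lookup from the same object, so adding a video only means adding one entry. `makeVideoNameLookup` is a hypothetical helper, and `videoModel` is assumed to carry the source URL in a `src` property, as in the controller above.

```javascript
// Hypothetical helper: derive getVideoName from the videos map instead of a switch.
function makeVideoNameLookup(videos) {
  // Invert { name: src } into a Map of src -> name.
  const nameBySrc = new Map();
  Object.keys(videos).forEach(function (name) {
    nameBySrc.set(videos[name], name);
  });

  return function getVideoName(videoModel) {
    return nameBySrc.has(videoModel.src) ? nameBySrc.get(videoModel.src) : 'Unknown Video';
  };
}

const videos = { test1: 'test1.mp4', test2: 'test2.mp4' };
const getVideoName = makeVideoNameLookup(videos);

console.log(getVideoName({ src: 'test2.mp4' })); // test2
console.log(getVideoName({ src: 'other.mp4' })); // Unknown Video
```

In the controller this would become `$scope.getVideoName = makeVideoNameLookup($scope.videos);`. The lookup is built once, so it has to be rebuilt if `$scope.videos` changes at runtime.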

- Developing the video player is much like developing the audio player, but it involves some extra configuration that must be considered, such as autoplay and allowing the video to play full screen. On closer inspection, these are just additional options that make the video player more capable.
- First, handle the `$videoReady` event with `$scope.$on('$videoReady', ...)` so that the videos in the default playlist autoplay.
- Next, there are a couple of controls to give the video player. The `getVideoName` method binds each video source to the title/name of the video.
- The `video` service must be used to add video sources. Note that the `'mp4'` type argument can be changed to play mp3 files or video files in other formats. Before using another format, check the list of formats supported by ngVideo.
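Since the first argument to `video.addSource` is the format (`'mp4'` in the controller above), one way to handle mixed formats is to derive it from the file extension and skip anything not on an allow-list before calling `addSource`. In this sketch, `sourceTypeFor` and the `SUPPORTED_TYPES` list are hypothetical and should be checked against the formats ngVideo and the target browsers actually support.

```javascript
// Hypothetical allow-list; verify it against ngVideo's documentation and browser support.
const SUPPORTED_TYPES = ['mp4', 'webm', 'ogg'];

// Return the type string to pass to video.addSource, or null if unsupported.
function sourceTypeFor(url) {
  const path = url.split(/[?#]/)[0];                // drop query string and fragment
  const file = path.slice(path.lastIndexOf('/') + 1); // last path segment only
  const dot = file.lastIndexOf('.');
  if (dot === -1) return null;                      // no extension
  let ext = file.slice(dot + 1).toLowerCase();
  if (ext === 'ogv') ext = 'ogg';                   // common extension for Ogg video
  return SUPPORTED_TYPES.indexOf(ext) !== -1 ? ext : null;
}

console.log(sourceTypeFor('test1.mp4'));     // mp4
console.log(sourceTypeFor('clip.WebM?v=2')); // webm
console.log(sourceTypeFor('movie.avi'));     // null
```

In `playVideo`, this could guard the call: compute `var type = sourceTypeFor(sourceUrl);` and only call `video.addSource(type, sourceUrl, true)` when `type` is not `null`.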

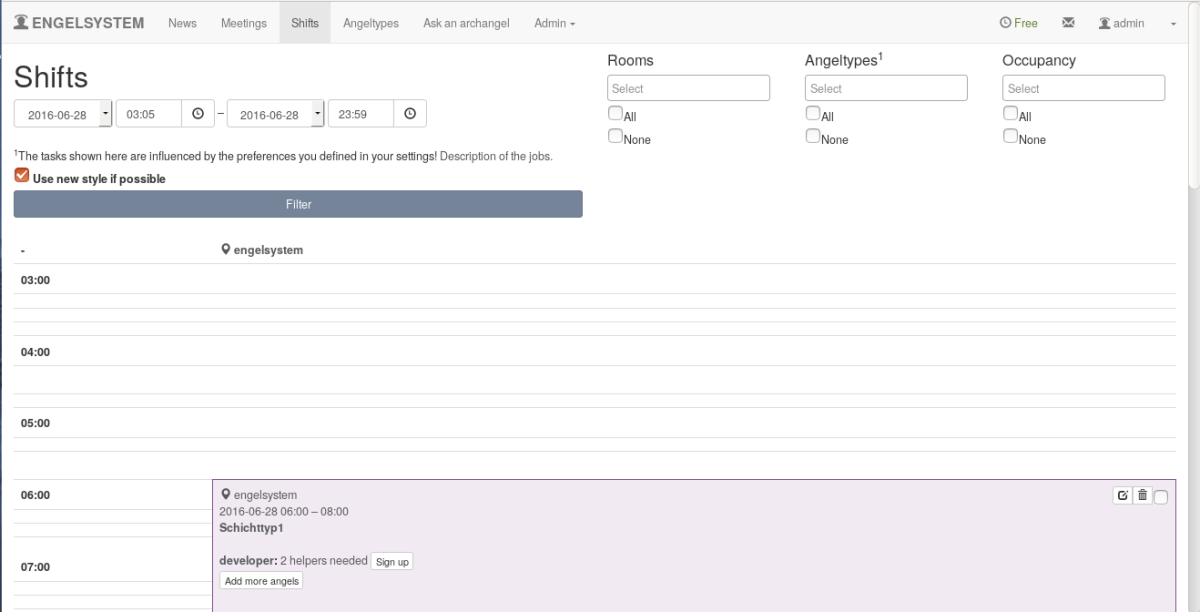

Below is the screenshot of the video player

That's it, folks!

Happy Hacking!!
