Link one repository’s subdirectory to another

Loklak, a distributed social media message search server, generates its documentation automatically through Continuous Integration. The generated documentation lives in the gh-pages branch of its repository. From there, it is linked into the gh-pages branch of the dev.loklak.org repository and kept up to date.

Loklak links the documentation from each subproject into the dev.loklak.org repository using git `subtrees`. Quoting the git documentation:

“Subtrees allow subprojects to be included within a subdirectory of the main project, optionally including the subproject’s entire history. A subtree is just a subdirectory that can be committed to, branched, and merged along with your project in any way you want.”

In other words, subtrees let us embed another project in a subdirectory of our main repository. The local copy can then be synced with the original project through a single git command.

Representation:

Loklak currently uses subtrees to link the repositories as follows.

git clone --quiet --branch=gh-pages git@github.com:loklak/dev.loklak.org.git central-docs

cd central-docs

git subtree pull --prefix=server https://github.com/loklak/loklak_server.git gh-pages --squash -m "Update server subtree"
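To see the add-then-pull cycle without touching any real repository, here is a minimal sketch that runs entirely in a temporary directory. The repositories `upstream` and `main-project`, the file `index.html`, and the demo commit identity are hypothetical stand-ins, not part of Loklak's setup; the sketch also assumes that `git subtree` is installed alongside git.

```shell
#!/bin/sh
# Throwaway demo of "git subtree add" followed by "git subtree pull".
# "upstream" and "main-project" are hypothetical stand-ins, not Loklak repositories.

demo_subtree_sync() (
    set -e
    work=$(mktemp -d)
    cd "$work"

    # Commit identity used only for these demo commits.
    export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
    export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com

    # The "original project" whose gh-pages content we want to embed.
    git init --quiet upstream
    git -C upstream checkout --quiet -b gh-pages
    echo "v1" > upstream/index.html
    git -C upstream add index.html
    git -C upstream commit --quiet -m "Publish v1"

    # The main project, which keeps a local copy under server/.
    git init --quiet main-project
    cd main-project
    git commit --quiet --allow-empty -m "Initial commit"
    git subtree add --prefix=server ../upstream gh-pages --squash \
        -m "Add server subtree" >/dev/null 2>&1

    # The original project publishes a new version.
    echo "v2" > ../upstream/index.html
    git -C ../upstream commit --quiet -am "Publish v2"

    # A single pull brings the subdirectory up to date.
    git subtree pull --prefix=server ../upstream gh-pages --squash \
        -m "Update server subtree" >/dev/null 2>&1

    # Show the synced content of the subdirectory.
    cat server/index.html
)
```

Calling `demo_subtree_sync` should print the second version of the file, which shows that the subdirectory followed the original project after one `git subtree pull`.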

git subtree split:

The problem with the current approach is that it is bloated: the documentation markup is first compiled in each subproject’s repository, and the compiled output is then aggregated in the dev.loklak.org repository. Instead, we intend to keep all the documentation markup in the docs folder of each repository and create subtrees from these folders into a master repository. The master repository then compiles the markup to generate the HTML pages.

Intended Implementation:

To implement this, we will use the `git subtree split` command.

- Run the following command in the subproject’s repository. It extracts the docs directory, together with its history, into a new branch named documentation.

git subtree split --prefix=docs/ -b documentation

- Clone the master repository. (This is the repository we intend to link the subdirectory with.)

git clone --quiet --branch=master git@github.com:loklak/dev.loklak.org.git loklak_docs

cd loklak_docs

- Retrieve the latest content from the subproject.

  - During the first run:

git subtree add --prefix=raw/server ../loklak_server documentation --squash -m "Update server subtree"

  - During subsequent runs:

git subtree pull --prefix=raw/server ../loklak_server documentation --squash -m "Update server subtree"

- Push changes.

git push -fq origin master > /dev/null 2>&1
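The only difference between the first run and later runs is whether `git subtree add` or `git subtree pull` is used. One way a script could make that choice automatically is sketched below. The helper names `subtree_action` and `sync_subtree`, and the idea of checking whether the prefix directory already exists, are assumptions made for illustration and not Loklak's actual CI code.

```shell
#!/bin/sh
# Sketch: choose between "git subtree add" and "git subtree pull".
# Helper names and the directory check are illustrative assumptions.

# Print "add" when the prefix directory is absent (first run),
# and "pull" when it already exists (later runs).
subtree_action() {
    if [ -d "$1" ]; then
        echo pull
    else
        echo add
    fi
}

# Usage: sync_subtree <prefix> <source-repo> <branch>
# Run from the root of the master repository checkout.
sync_subtree() {
    prefix=$1
    source_repo=$2
    branch=$3
    action=$(subtree_action "$prefix")

    # ${prefix##*/} keeps the last path component, e.g. "server" for raw/server.
    git subtree "$action" --prefix="$prefix" "$source_repo" "$branch" \
        --squash -m "Update ${prefix##*/} subtree"
}
```

From inside the loklak_docs checkout, `sync_subtree raw/server ../loklak_server documentation` would then run the add command on the first run and the pull command on every later run.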

2. Network Inspection

2. Network Inspection

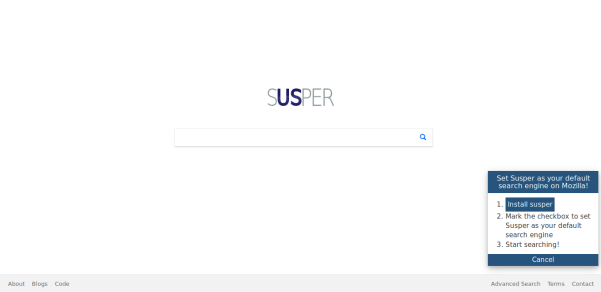

Search box on Firefox

Search box on Firefox

You must be logged in to post a comment.