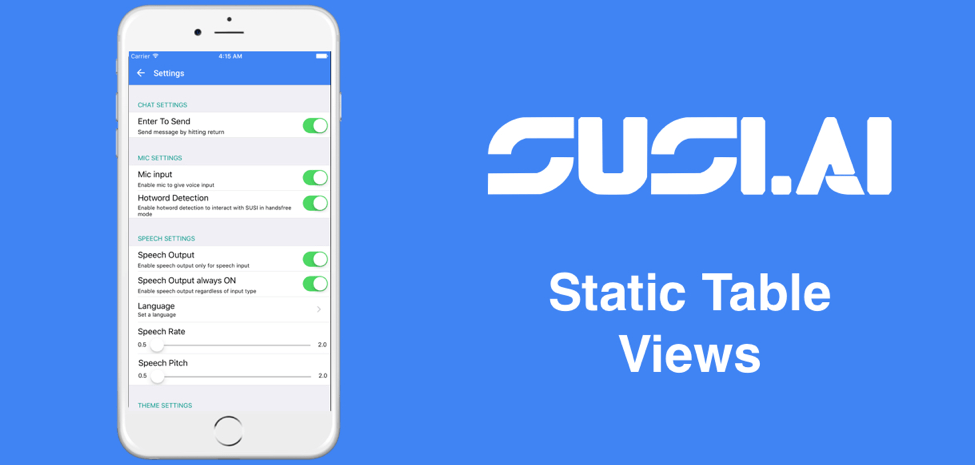

Settings Controller UI using Static Table View

Dynamic table views are used where cells can be reused: the cells share the same UI elements and differ only in the content they display. The Settings Controller was initially built using UICollectionViewController, which is completely dynamic, but I later realized that its cells never change, so dynamic cells served no purpose and I switched to static table view cells. Using a static table view is easy. In this blog post, I will explain how it was implemented in the SUSI iOS app.
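For contrast, here is a minimal sketch of what a dynamic table view typically requires: a registered reusable cell plus data source methods that fill each cell with content. The class name `DynamicSettingsSketch`, the reuse identifier and the row titles are hypothetical and not taken from the SUSI iOS code. With static cells, none of this boilerplate is needed.

```swift
import UIKit

// Hypothetical example of the dynamic approach; not part of the SUSI iOS app.
final class DynamicSettingsSketch: UITableViewController {

    // Placeholder content; every row reuses the same cell layout.
    let titles = ["Enter to send", "Mic input"]

    override func viewDidLoad() {
        super.viewDidLoad()
        // Register one reusable cell class under a reuse identifier.
        tableView.register(UITableViewCell.self, forCellReuseIdentifier: "cell")
    }

    override func tableView(_ tableView: UITableView,
                            numberOfRowsInSection section: Int) -> Int {
        return titles.count
    }

    override func tableView(_ tableView: UITableView,
                            cellForRowAt indexPath: IndexPath) -> UITableViewCell {
        // Dequeue a recycled cell and only swap the content it displays.
        let cell = tableView.dequeueReusableCell(withIdentifier: "cell", for: indexPath)
        cell.textLabel?.text = titles[indexPath.row]
        return cell
    }
}
```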

Let’s start by dragging and dropping a UITableViewController into the storyboard file.

By default the UITableView's content is set to Dynamic Prototypes, but we need static cells, so we change it to Static Cells and set the number of sections to 5 to suit our needs. To improve the look of the UI, we also set the style to Grouped.

For each section we can now add UI elements statically, so we add all the settings with their corresponding section headers to obtain the settings UI.

After creating this UI, we can refer to any UI element independently, whichever cell it is in. Here we create references to each UISlider and UISwitch so that we can trigger an action whenever the value of any of them changes and read its current state.
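As a rough sketch of what such references can look like, the controller below declares two outlets and copies the stored values into the controls when the view loads. The class name, the outlet names, the `SketchKeys` strings and the restore step are assumptions made for illustration; the app keeps its real keys in `ControllerConstants.UserDefaultsKeys`.

```swift
import UIKit

// Hypothetical key names standing in for ControllerConstants.UserDefaultsKeys.
enum SketchKeys {
    static let enterToSend = "enterToSend"
    static let speechRate = "speechRate"
}

final class SettingsSketchViewController: UITableViewController {

    // Outlets connected in the storyboard to controls inside static cells.
    @IBOutlet weak var enterToSendSwitch: UISwitch!
    @IBOutlet weak var speechRateSlider: UISlider!

    override func viewDidLoad() {
        super.viewDidLoad()
        restoreControls(from: .standard)
    }

    // Copies the saved settings into the controls so the UI reflects stored state.
    func restoreControls(from defaults: UserDefaults) {
        enterToSendSwitch.isOn = defaults.bool(forKey: SketchKeys.enterToSend)
        speechRateSlider.value = defaults.float(forKey: SketchKeys.speechRate)
    }
}
```

Because the cells are static, the controller needs no data source methods; the storyboard already describes every row.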

To create an action, write a function and prefix it with `@IBAction` so that it can be linked to UI elements in the storyboard, then click and drag from the circle next to the function to the UI element it should be attached to. After a successful link, hovering over the same circle reveals all the UI elements that trigger that function. Below is a method marked with `@IBAction`. It is executed whenever any slider or switch value changes; it updates the UserDefaults value and sends an API request to update the setting for the user on the server.

    @IBAction func settingChanged(sender: AnyObject?) {
        var params = [String: AnyObject]()
        var key: String = ""

        if let senderTag = sender?.tag {
            // Map the tag set on the control in the storyboard to its UserDefaults key.
            if senderTag == 0 {
                key = ControllerConstants.UserDefaultsKeys.enterToSend
            } else if senderTag == 1 {
                key = ControllerConstants.UserDefaultsKeys.micInput
            } else if senderTag == 2 {
                key = ControllerConstants.UserDefaultsKeys.hotwordEnabled
            } else if senderTag == 3 {
                key = ControllerConstants.UserDefaultsKeys.speechOutput
            } else if senderTag == 4 {
                key = ControllerConstants.UserDefaultsKeys.speechOutputAlwaysOn
            } else if senderTag == 5 {
                key = ControllerConstants.UserDefaultsKeys.speechRate
            } else if senderTag == 6 {
                key = ControllerConstants.UserDefaultsKeys.speechPitch
            }

            // Sliders store their Float value; switches toggle the stored Bool.
            // The value sent to the server matches what was just stored.
            if let slider = sender as? UISlider {
                UserDefaults.standard.set(slider.value, forKey: key)
                params[ControllerConstants.value] = slider.value as AnyObject
            } else {
                UserDefaults.standard.set(!UserDefaults.standard.bool(forKey: key), forKey: key)
                params[ControllerConstants.value] = UserDefaults.standard.bool(forKey: key) as AnyObject
            }

            params[ControllerConstants.key] = key as AnyObject

            // Sync the setting to the server only when a user is logged in.
            if let delegate = UIApplication.shared.delegate as? AppDelegate, let user = delegate.currentUser {
                params[Client.UserKeys.AccessToken] = user.accessToken as AnyObject
                params[ControllerConstants.count] = 1 as AnyObject

                Client.sharedInstance.changeUserSettings(params) { (_, message) in
                    // Show the server response on the main thread.
                    DispatchQueue.main.async {
                        self.view.makeToast(message)
                    }
                }
            }
        }
    }
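The chain of tag comparisons in the method above could also be written as an enum with `Int` raw values, which keeps the tag-to-key mapping in one place and returns `nil` for an unknown tag. This is only a sketch of an alternative: the `SettingTag` type, the `defaultsKey(forTag:)` helper and the key strings are hypothetical, since the real strings live in `ControllerConstants.UserDefaultsKeys`.

```swift
import Foundation

// Tags assigned to the controls in the storyboard, in the same order
// as in settingChanged (raw values start at 0 and increment by 1).
enum SettingTag: Int {
    case enterToSend, micInput, hotwordEnabled, speechOutput,
         speechOutputAlwaysOn, speechRate, speechPitch

    // Hypothetical key strings used for illustration only.
    var defaultsKey: String {
        switch self {
        case .enterToSend: return "enterToSend"
        case .micInput: return "micInput"
        case .hotwordEnabled: return "hotwordEnabled"
        case .speechOutput: return "speechOutput"
        case .speechOutputAlwaysOn: return "speechOutputAlwaysOn"
        case .speechRate: return "speechRate"
        case .speechPitch: return "speechPitch"
        }
    }
}

// Returns the UserDefaults key for a control tag, or nil if the tag is unknown.
func defaultsKey(forTag tag: Int) -> String? {
    return SettingTag(rawValue: tag)?.defaultsKey
}
```

Inside the action, `guard let key = defaultsKey(forTag: senderTag) else { return }` would then replace the `if`/`else if` chain and avoid writing to an empty key when a control has an unexpected tag.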

