At the current pace of web development, quick response times and low downtime are core goals of any project. For a continuous deployment scheme, the most important factor is how efficiently contributors and maintainers can test and deploy the code with every PR. We faced this question when we started building Loklak Search.

Because Loklak Search is a data-driven, client-side web app, GitHub Pages is the simplest way to host it. At FOSSASIA, apps are developed by many developers working together on different features, which makes it all the more important to have a unified flow of control and a simple integration with GitHub Pages as the continuous deployment pipeline.

The broad concept of continuous deployment therefore boils down to three basic requirements:

- Automatic unit testing.

- Automatic building of the application when a PR is merged, and deployment to the gh-pages branch.

- Easy provisioning of demo links, so that developers can test and share the features they are working on before the PR is merged.

Automatic Unit Testing

At Loklak Search we use Karma for unit tests, and @angular/cli does most of the work of running them. The backbone of the unit testing is Travis CI, which we use as our CI solution. All of this is easy to set up and use.

A particular advantage of Travis CI is its ability to run custom shell scripts at different stages of the build process, and we use this capability for our continuous deployment.

Automatic Builds of PRs and Deploy on Merge

This is the main requirement of our CD scheme, and we meet it with a shell script, deploy.sh, in the root of the project repository.

The deploy script has a few critical sections. It starts with initialisation instructions that set up the appropriate variables and decrypt the SSH key that Travis uses to push to the gh-pages branch (we will set up this key later).

- We also make sure that the deploy script runs only for builds of the master branch, exiting early from the script otherwise.

```bash
#!/bin/bash

SOURCE_BRANCH="master"
TARGET_BRANCH="gh-pages"

# Pull requests and commits to other branches shouldn't try to deploy.
if [ "$TRAVIS_PULL_REQUEST" != "false" ] || [ "$TRAVIS_BRANCH" != "$SOURCE_BRANCH" ]; then
  echo "Skipping deploy; the request or commit is not on master"
  exit 0
fi
```
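The guard can be pulled out into a small function to see which builds it lets through. This is a sketch with a hypothetical should_deploy helper, not part of deploy.sh. It relies on Travis CI setting TRAVIS_PULL_REQUEST to "false" for push builds and, for pull-request builds, setting TRAVIS_BRANCH to the branch the PR targets, which is why the branch check alone is not enough.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeeds (exit 0) only for a non-PR build of the source branch.
should_deploy() {
  local pull_request="$1" branch="$2" source_branch="${3:-master}"
  [ "$pull_request" = "false" ] && [ "$branch" = "$source_branch" ]
}

# Try the same decision the deploy.sh guard makes on a few combinations.
for build in "false master" "42 master" "false feature-x"; do
  set -- $build   # split "PR branch" into $1 and $2
  if should_deploy "$1" "$2"; then
    echo "PR=$1 branch=$2 -> deploy"
  else
    echo "PR=$1 branch=$2 -> skip"
  fi
done
```

A PR opened against master still reports TRAVIS_BRANCH=master, so only the combination of both checks keeps PR builds from deploying.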

- Next we save some useful information about the repository and decrypt the deploy key, which is generated manually and encrypted with the Travis client, then load it into an SSH agent.

```bash
# Save some useful information
REPO=$(git config remote.origin.url)
SSH_REPO=${REPO/https:\/\/github.com\//git@github.com:}
SHA=$(git rev-parse --verify HEAD)

# Decryption of deploy_key.enc
ENCRYPTED_KEY_VAR="encrypted_${ENCRYPTION_LABEL}_key"
ENCRYPTED_IV_VAR="encrypted_${ENCRYPTION_LABEL}_iv"
ENCRYPTED_KEY=${!ENCRYPTED_KEY_VAR}
ENCRYPTED_IV=${!ENCRYPTED_IV_VAR}
openssl aes-256-cbc -K "$ENCRYPTED_KEY" -iv "$ENCRYPTED_IV" -in deploy_key.enc -out deploy_key -d
chmod 600 deploy_key
eval "$(ssh-agent -s)"
ssh-add deploy_key
```
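Two bash expansions do the work in this block: `${var/pattern/replacement}` rewrites the HTTPS remote into its SSH form, and `${!name}` reads the variable whose name is stored in `name`, which is how the script finds `encrypted_<label>_key` without hard-coding the label. A minimal sketch with a hypothetical to_ssh_url helper; the label 0a6446eb3ae3 and the key/IV values are placeholders, not real Travis secrets.

```bash
#!/usr/bin/env bash
# Pattern substitution: replace the HTTPS prefix with the SSH prefix (first match only).
to_ssh_url() {
  local repo="$1"
  echo "${repo/https:\/\/github.com\//git@github.com:}"
}

# Placeholder values standing in for what Travis would export.
ENCRYPTION_LABEL="0a6446eb3ae3"
encrypted_0a6446eb3ae3_key="deadbeef"
encrypted_0a6446eb3ae3_iv="cafebabe"

# Build the variable *names*, then expand them indirectly with ${!name}.
key_var="encrypted_${ENCRYPTION_LABEL}_key"
iv_var="encrypted_${ENCRYPTION_LABEL}_iv"

to_ssh_url "https://github.com/fossasia/loklak_search.git"
echo "key=${!key_var} iv=${!iv_var}"
```

A remote that is already in SSH form does not match the pattern and is returned unchanged.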

- We clone our repository from GitHub and check out the target branch, which is gh-pages in our case, creating it as an orphan branch if it does not exist yet.

```bash
# Clone the repository into the repo/ directory, then switch to the
# gh-pages branch, creating it as an orphan branch if it doesn't exist
git clone "$REPO" repo
cd repo
git checkout "$TARGET_BRANCH" || git checkout --orphan "$TARGET_BRANCH"
cd ..

# Set up the username and email. The deploy commits are made inside repo/,
# so the identity is configured on that clone (git -C repo).
git -C repo config user.name "Travis CI"
git -C repo config user.email "$COMMIT_AUTHOR_EMAIL"
```

- Next we clean up the directory so that every deploy starts from a fresh build. We protect the static files that are not generated by the build process: CNAME and 404.html.

```bash
# Clean up the old gh-pages branch, except the CNAME and 404.html files.
# repo/* skips dot-entries such as .git; -maxdepth 0 limits find to those top-level entries.
find repo/* -maxdepth 0 ! -name "CNAME" ! -name "404.html" -exec rm -rf {} + 2> /dev/null
cd repo
git add --all
git commit -m "Travis CI Clean Deploy : ${SHA}"
```
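The clean-up can be rehearsed on a throwaway directory before trusting it with a real branch. In this sketch the directory layout is invented for illustration; it uses `-maxdepth 0` so that find only considers the top-level entries matched by `repo/*`, and relies on the glob skipping dot-entries so that `.git` survives.

```bash
#!/usr/bin/env bash
# Build a fake checkout: a .git directory, the two protected files, and build output.
demo=$(mktemp -d)
mkdir -p "$demo/repo/.git" "$demo/repo/assets"
touch "$demo/repo/CNAME" "$demo/repo/404.html" "$demo/repo/index.html" \
      "$demo/repo/main.js" "$demo/repo/assets/logo.png"

# Remove every top-level entry except CNAME and 404.html (dot-entries are not matched by *).
find "$demo"/repo/* -maxdepth 0 ! -name "CNAME" ! -name "404.html" -exec rm -rf {} +

# List what is left, including dot-entries.
ls -A "$demo/repo"
```

Only `.git`, `CNAME` and `404.html` remain, so the subsequent `git add --all` records the removal of the old build.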

- After checking out the master branch, we run npm install to install all our dependencies and then build the project. We then switch back to the gh-pages branch, move the files generated by ng build into it, and fold them into the clean-deploy commit.

```bash
git checkout "$SOURCE_BRANCH"

# Actual building and setup of the current push.
npm install
ng build --prod --aot

git checkout "$TARGET_BRANCH"
mv dist/* .

# Stage the new build and amend it into the clean-deploy commit
git add .
git commit --amend --no-edit --allow-empty
```
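As written, deploy.sh does not use `set -e`, so a failing npm install or ng build does not stop the script by itself, and the later steps still run against whatever `dist/` contains. One possible hardening, sketched here as a hypothetical build_ok helper rather than anything the project uses, is to check the build before switching branches:

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeeds only if the build command exits 0
# AND leaves at least one entry in the output directory.
build_ok() {
  local out_dir="$1"
  shift                                        # remaining arguments are the build command
  "$@" || return 1                             # the build itself failed
  [ -n "$(ls -A "$out_dir" 2>/dev/null)" ]     # output directory exists and is non-empty
}

# Possible use in deploy.sh, in place of the bare ng build line:
#   build_ok dist ng build --prod --aot || { echo "Build failed; not deploying"; exit 1; }
```

Exiting at this point leaves the remote gh-pages branch untouched, because nothing has been pushed yet.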

- The final step is to push the build files to the gh-pages branch. Since we only want to push when the build has actually changed, we first compare the new commit with the previous one.

```bash
# Deploy only if the build has changed
if [ -z "$(git diff --name-only HEAD HEAD~1)" ]; then
  echo "No changes in the build; exiting"
  exit 0
else
  # There are changes in the build; push them to gh-pages via Travis
  echo "There are changes in the build; pushing the changes to gh-pages"
  git push --force "$SSH_REPO" "$TARGET_BRANCH"
fi
```
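The change check compares the file list from `git diff --name-only` with an empty string. The sketch below builds a throwaway repository with two commits (the file names and the author identity are placeholders) to show why the command substitution should be quoted, and shows `git diff --quiet` as an alternative that reports differences through its exit status instead.

```bash
#!/usr/bin/env bash
# Throwaway repository with two "builds" that touch two files.
demo=$(mktemp -d)
cd "$demo" || exit 1
git init -q
commit() { git -c user.name="Demo" -c user.email="demo@example.com" commit -q -m "$1"; }

printf 'a\n' > index.html; printf 'b\n' > main.js
git add . && commit "first build"
printf 'a2\n' > index.html; printf 'b2\n' > main.js
git add . && commit "second build"

# Unquoted: two file names become extra arguments to [, which then fails with an
# error (e.g. "binary operator expected") instead of evaluating -z.
[ -z $(git diff --name-only HEAD~1 HEAD) ] 2>/dev/null; echo "unquoted test status: $?"

# Quoted: the whole list is one string, so -z works for any number of files.
if [ -z "$(git diff --name-only HEAD~1 HEAD)" ]; then echo "no changes"; else echo "changes"; fi

# git diff --quiet exits 0 when there are no differences and 1 when there are.
if git diff --quiet HEAD~1 HEAD; then echo "no changes"; else echo "changes"; fi
```

With the unquoted form, an error status happens to send the script to the push branch, so it works by accident rather than by design.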

These roughly 70 lines of code do all the heavy lifting and automate a large part of our CD. They help keep incorrect builds out of the gh-pages branch and make for a smoother experience for both developers and maintainers.

The most important requirement of this script is that Travis is able to push to gh-pages. This requires setting up the keys properly, and it is definitely the trickiest part of the whole setup.

- The first step is to generate the SSH key, which is easily done in a terminal with ssh-keygen.

```console
$ ssh-keygen -t rsa -b 4096 -C "your_email@example.com"
```

- I recommend not setting a passphrase: Travis would then need it as well, which makes the setup tricky.

- This generates an RSA public/private key pair. Save it as deploy_key when prompted for a file name, since that is the name the later steps expect; the public half is written to deploy_key.pub.

- We then add the public key as a deploy key in the repository settings on GitHub, with write access allowed so that it can be used to push.

- After setting up the public key on GitHub, we give the private key to Travis so that Travis can push to GitHub.

- To do this we use the Travis client, which encrypts the private key and stores the encryption key and IV as secure environment variables on Travis. Travis then uses these values to decrypt the private key during the build.

```console
$ travis encrypt-file deploy_key
encrypting deploy_key for domenic/travis-encrypt-file-example
storing result as deploy_key.enc
storing secure env variables for decryption

Please add the following to your build script (before_install stage in your .travis.yml, for instance):

    openssl aes-256-cbc -K $encrypted_0a6446eb3ae3_key -iv $encrypted_0a6446eb3ae3_iv -in deploy_key.enc -out deploy_key -d

Pro Tip: You can add it automatically by running with --add.

Make sure to add deploy_key.enc to the git repository.
Make sure not to add deploy_key to the git repository.
Commit all changes to your .travis.yml.
```

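- Make sure to add deploy_key.enc to the git repository and never to commit the unencrypted deploy_key. The label in the variable names (0a6446eb3ae3 in the sample output) is what deploy.sh expects in the ENCRYPTION_LABEL environment variable, and the script also reads COMMIT_AUTHOR_EMAIL, so both need to be defined on Travis, for example in the repository settings.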
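The openssl command printed by travis encrypt-file shows what happens under the hood: the file is encrypted with AES-256-CBC using a hex key and IV, and only those two values need to be stored on Travis. The round trip below reproduces this locally on a dummy file; the key and IV are generated with `openssl rand` purely for illustration, and the file content is a placeholder, not a real private key.

```bash
#!/usr/bin/env bash
# Dummy "private key" in a throwaway directory.
demo=$(mktemp -d)
printf 'not a real private key\n' > "$demo/deploy_key"

KEY=$(openssl rand -hex 32)   # 256-bit key as 64 hex characters
IV=$(openssl rand -hex 16)    # 128-bit IV as 32 hex characters

# Encrypt, as the Travis client does on the developer's machine ...
openssl aes-256-cbc -K "$KEY" -iv "$IV" -in "$demo/deploy_key" -out "$demo/deploy_key.enc"
# ... and decrypt with -d, as deploy.sh does on Travis.
openssl aes-256-cbc -K "$KEY" -iv "$IV" -in "$demo/deploy_key.enc" -out "$demo/deploy_key.dec" -d

cmp -s "$demo/deploy_key" "$demo/deploy_key.dec" && echo "round trip OK"
```

Anyone with read access to the repository sees only deploy_key.enc; without the key and IV held by Travis it cannot be turned back into the private key.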

With all these steps done, our client-side web application is deployed on every push to the master branch.

These steps are needed only once in the project life cycle. At Loklak Search we haven't touched deploy.sh since it was written; it's a simple script, but it does all the continuous deployment work we need.

Generation of Demo Links and Test Deployments

Another essential part of continuous, agile development is that developers can share what they have built and maintainers can review those features and fixes. This is difficult in a web application, because fixes and features are more often than not visual, and attaching screenshots to every PR becomes a hassle. If developers can deploy their changes to the gh-pages branch of their own fork and share the demo link in the PR, development moves much faster.

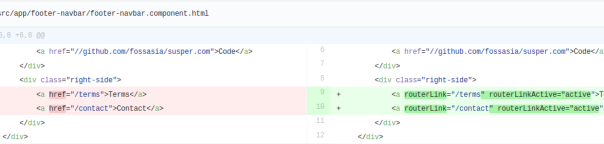

This step is specific to Angular projects, although similar approaches exist for React and other frameworks; failing that, we can simply build the page ourselves and push the output to the gh-pages branch of our fork.

We use @angular/cli to build the project and then the angular-cli-ghpages npm package to push the output to the gh-pages branch of the fork. The two commands are combined into an npm script that runs as npm run deploy, and this completes our CD scheme.
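The demo link a developer shares is the GitHub Pages URL of their fork. As a sketch, a hypothetical pages_url helper can derive it from the fork's remote URL, assuming a project page served at `https://<owner>.github.io/<repository>/` (a repository named `<owner>.github.io` is a user page served from the root and is not handled here):

```bash
#!/usr/bin/env bash
# Hypothetical helper: GitHub Pages project URL for a GitHub remote (HTTPS or SSH form).
pages_url() {
  local remote="${1%.git}"                  # drop a trailing .git
  remote="${remote#https://github.com/}"    # HTTPS form -> owner/repo
  remote="${remote#git@github.com:}"        # SSH form   -> owner/repo
  local owner="${remote%%/*}" repo="${remote#*/}"
  echo "https://${owner}.github.io/${repo}/"
}

pages_url "https://github.com/alice/loklak_search.git"
pages_url "git@github.com:alice/loklak_search.git"
```

Because a project page is served from a sub-path, an Angular build for it generally needs a matching base href, which ng build accepts through its --base-href option; how npm run deploy handles this in Loklak Search is not shown in this post.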

Conclusion

The continuous deployment scheme has clear advantages over manual deployment, especially for client-side web apps that receive a lot of PRs. It removes the deployment hassle in a simple way: no deployment requires manual intervention. Developers can concentrate on coding the application, maintainers can review PRs by looking at the demo links and merge them once they are in good shape, and the deployment itself is done entirely by the shell script, without any commands from a developer or a maintainer.

Links

Loklak Search GitHub Repository: https://github.com/fossasia/loklak_search

Loklak Search Application: http://loklak.net/

Loklak Search TravisCI: https://travis-ci.org/fossasia/loklak_search/

Deploy Script: https://github.com/fossasia/loklak_search/blob/master/deploy.sh
