Adding a Last Modified At column in Open Event Server

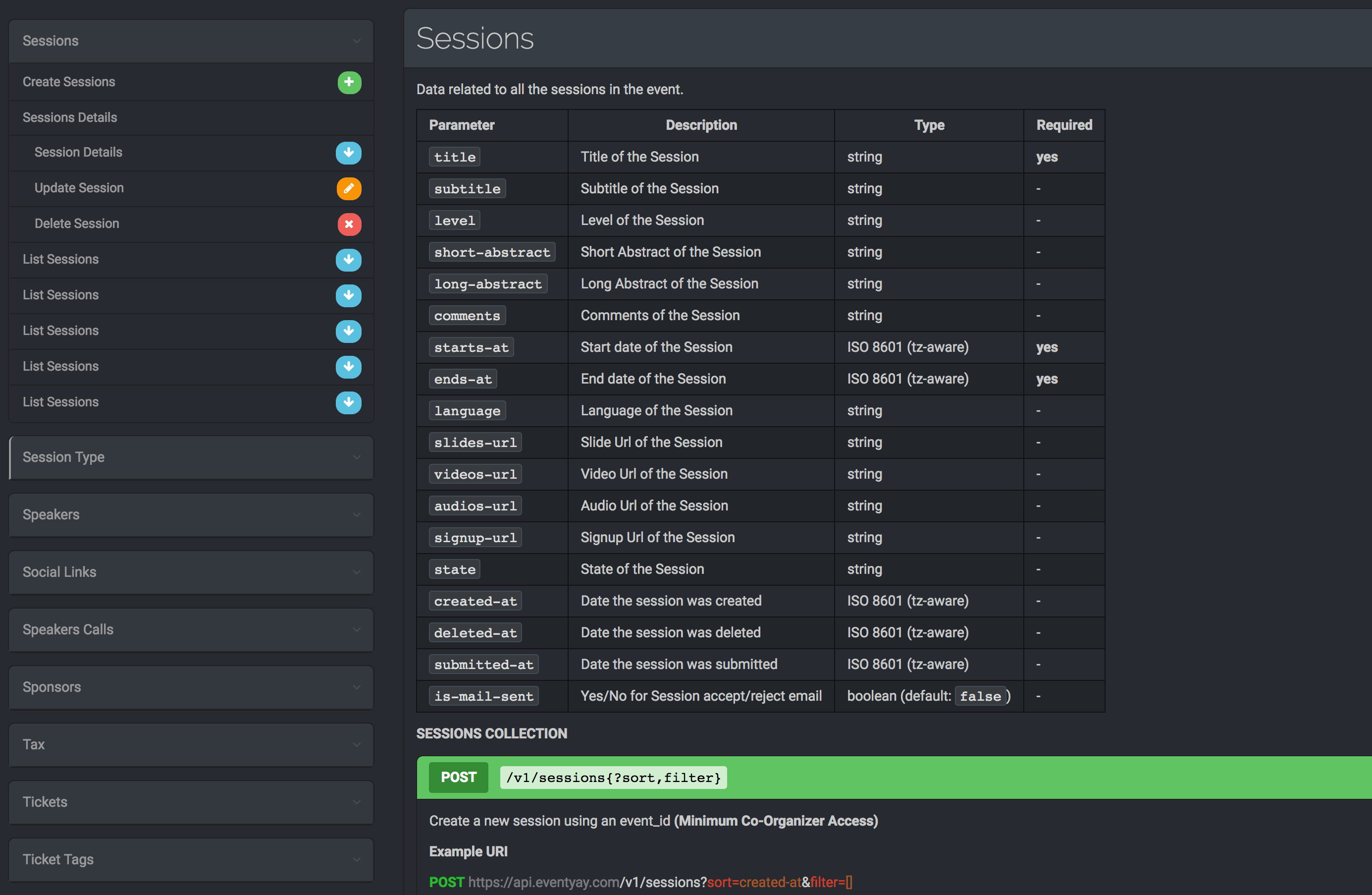

This blog article will illustrate how, with the help of SQLAlchemy, a last modified at column, with complete functionality can be added to the Open Event Server database. To illustrate the process, the blog article will discuss adding the column to the sessions api. Since last modified at is a time field, and will need to be updated each time user successfully updates the session, the logic to implement will be a slightly more complex than a mere addition of a column to the table.

The first obvious step will comprise of adding the column to the database table. To achieve the same, the column will have to be added to the model for the sessions table, as well as the schema.

In app/api/schema/sessions.py:

... class SessionSchema(Schema): """ Api schema for Session Model """ ... last_modified_at = fields.DateTime(dump_only=True) ...

And in app/models/sessions.py:

import pytz ... class Session(db.Model): """Session model class""" __tablename__ = 'sessions' __versioned__ = { 'exclude': [] } ... last_modified_at = db.Column(db.DateTime(timezone=True), default=datetime.datetime.utcnow) def init(self, ..., last_modified_at=None)) #inside init method ... self.last_modified_at = datetime.datetime.now(pytz.utc) ...

NOTE: The users for the open event organiser server will be operating in multiple time zones and hence it is important for all the times to be in sync, hence the open event database maintains all the time in UTC timezone (python’s pytz module takes care of converting user’s local time into UTC time while storing, thus unifying the timezones.) From this, it directly follows that the time needs to be timezone aware hence timezone=true is passed, while defining the column.

Next, while initialising an object of this class, the last modified time is the time of creation, and hence

datetime.now(pytz.utc) is set as the initial value which basically stores the current time in UTC timezone format.

Finally, the logic for updating the last modified at column every time any other value changes for a session record needs to be implemented. SQLAlchemy provides an inbuilt support for detecting update and insert events which have been used to achieve the goal. To quote the official SQLAlchemy Docs, “SQLAlchemy includes an event API which publishes a wide variety of hooks into the internals of both SQLAlchemy Core and ORM.”

@event.listens_for(Session, 'after_update') def receive_after_update(mapper, connection, target): target.last_modified_at = datetime.datetime.now(pytz.utc)

The listens_for() decorator is used to register the event according to the arguments passed to it. In our case, it will register any event on the Session API (sessions table), whenever it updates.

The corresponding function defined below the decorator, receive_after_update(mapper, connection, target) is then called, and session model (table) is the the registered target with the event. It sets the value of the last_modified_at to the current time in the UTC timezone as expected.

Lastly, since the changes have been made to the database schema, the migration file needs to be generated, and the database will be upgraded to alter the structure.

The sequence of steps to be followed on the CLI will be

> python manage.py db migrate > python manage.py db upgrade

Resources

- SQLAlchemy Events – Official SQLAlchemy documentation

- Session Model – Complete source code of file which defines session Model

- Session Schema – Final schema for sessions API

So if developer changes table he has to run migration!

So if developer changes table he has to run migration!

You must be logged in to post a comment.