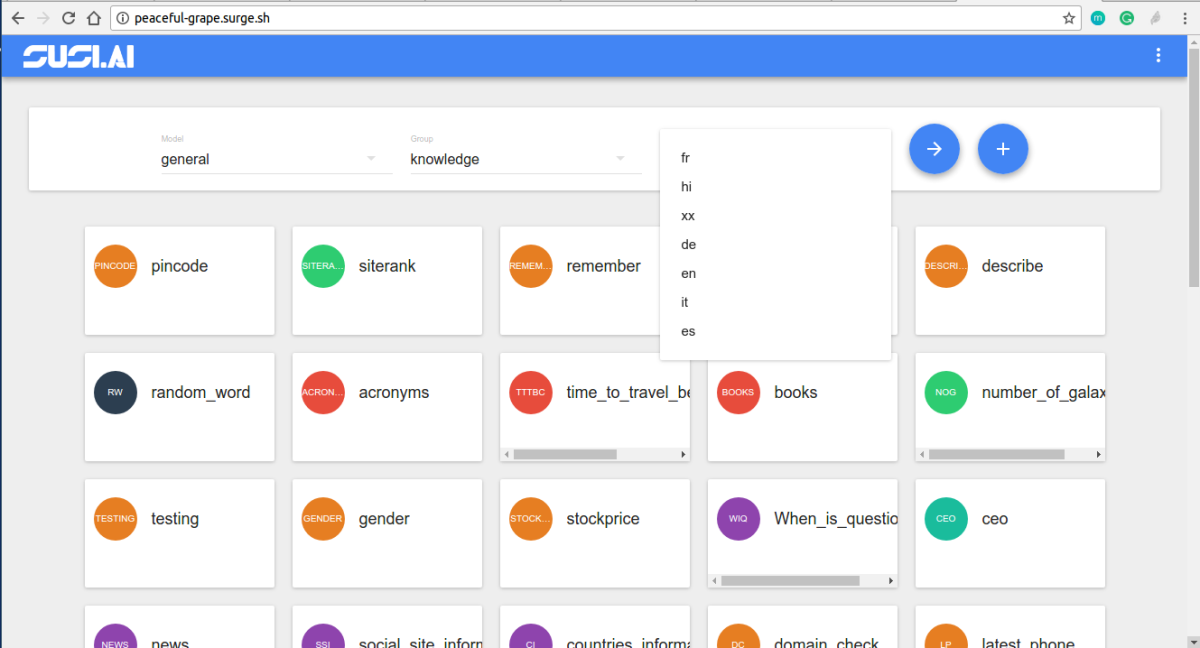

Fetching Images for RSS Responses in SUSI Web Chat

Initially, SUSI Web Chat rendered RSS action type responses like this:

The response from the server initially contained only:

- Title

- Description

- Link

We needed to improve how the web search and RSS results are displayed, and to add images to the results.

The web search and RSS results are now rendered as:

How was this implemented?

SUSI AI uses Yacy to fetch RSS feeds. First, the console process that the server uses to return the RSS feeds from Yacy needs to be configured to return images too.

    "yacy": {
      "example": "http://127.0.0.1:4000/susi/console.json?q=%22SELECT%20title,%20link%20FROM%20yacy%20WHERE%20query=%27java%27;%22",
      "url": "http://yacy.searchlab.eu/solr/select?wt=yjson&q=",
      "test": "java",
      "parser": "json",
      "path": "$.channels[0].items",
      "license": ""
    }

In a console process, we provide the URL to fetch data from, the query parameter to pass to that URL, and the path at which to look for the answer in the API response.

- url = <url> – the URL to the remote JSON service which will be used to retrieve information. It must contain a $query$ string.

- test = <parameter> – the parameter that will replace the $query$ string inside the given URL. It is required to test the service.

Here the URL used is:

http://yacy.searchlab.eu/solr/select?wt=yjson&q=QUERY
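
As a rough illustration of how a query term ends up in the request, the sketch below appends a URL-encoded term to the `url` value from the configuration above. The helper name `buildYacyUrl` is hypothetical and not part of SUSI. The real substitution happens inside the SUSI server, so treat this only as a picture of the resulting URL.

```javascript
// Base URL copied from the "yacy" console configuration above.
const YACY_URL = 'http://yacy.searchlab.eu/solr/select?wt=yjson&q=';

// Hypothetical helper (not part of SUSI): appends the URL-encoded
// query term to the base URL.
function buildYacyUrl(query) {
  return YACY_URL + encodeURIComponent(query);
}

console.log(buildYacyUrl('java'));
```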

To include images in RSS action responses, we also need to parse the images from the Yacy response. For this, we add `image` to the selection rule when calling the console process:

    "process": [
      {
        "type": "console",
        "expression": "SELECT title,description,link,image FROM yacy WHERE query='$1$';"
      }
    ]

Now the response from the server for the RSS action type will also include `image` along with title, description, and link. An example response for the query `Google`:

    {
      "title": "Terms of Service | Google Analytics \u2013 Google",
      "description": "Read Google Analytics terms of service.",
      "link": "http://www.google.com/analytics/terms/",
      "image": "https://www.google.com/images/branding/googlelogo/1x/googlelogo_color_116x41dp.png"
    }

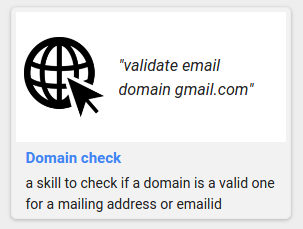

However, the results sometimes do not contain images because none are stored in the index. This may happen if the result comes from p2p transmission within Yacy, where no images are transmitted. In cases where the server returns no image, we use the link preview service to preview the link and fetch the image.

The endpoint for previewing the link is:

BASE_URL+'/susi/linkPreview.json?url=URL'
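
A minimal sketch of building that request URL is shown below. The helper name `buildPreviewUrl` is hypothetical, and encoding the link with `encodeURIComponent` is an assumption on my part: it keeps the `?` and `&` characters of the previewed link from being read as part of the preview request itself. The base URL in the example is the local server address from the console example earlier.

```javascript
// Hypothetical helper (not from the SUSI code base): builds the link
// preview request URL for one result link.
function buildPreviewUrl(baseUrl, link) {
  // Assumption: the link is URL-encoded so that its own query string
  // does not get mixed up with the preview request's parameters.
  return baseUrl + '/susi/linkPreview.json?url=' + encodeURIComponent(link);
}

console.log(buildPreviewUrl('http://127.0.0.1:4000', 'http://www.google.com/analytics/terms/'));
```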

On the client side, we first search the response for data objects that already have images in API actions. Then, among the remaining data objects in answers[0].data, we preview the links to fetch images, keeping a check on the count. This needs to be done when processing the history cognitions too.

To preview the remaining links, we cannot make ajax calls directly in a loop, since each call is asynchronous and the loop would not wait for its result before moving on. To handle this, nested ajax calls are made using the function previewURLForImage(), which works through the remaining links one at a time. On success, the result is counted and previewURLForImage() is called on the next link; on error, previewURLForImage() is tried on the next link without counting the failed one.

    success: function (rssResponse) {
      // Keep the result only if the preview service accepted the link
      if (rssResponse.accepted) {
        respData.image = rssResponse.image;
        respData.descriptionShort = rssResponse.descriptionShort;
        receivedMessage.rssResults.push(respData);
      }
      // Stop when enough results are collected or no links remain
      if (receivedMessage.rssResults.length === count ||
          j === remainingDataIndices.length - 1) {
        let message = ChatMessageUtils.getSUSIMessageData(receivedMessage, currentThreadID);
        ChatAppDispatcher.dispatch({
          type: ActionTypes.CREATE_SUSI_MESSAGE,
          message
        });
      } else {
        // Otherwise preview the next remaining link
        j += 1;
        previewURLForImage(receivedMessage, currentThreadID, BASE_URL, data, count, remainingDataIndices, j);
      }
    },
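
The control flow described above can also be sketched in a self-contained form. This is not the code used in SUSI Web Chat: it swaps the nested ajax callbacks for `async`/`await`, and the names `collectRssResults` and `previewLink` are hypothetical. `previewLink` stands in for the linkPreview.json request and is assumed to return a Promise resolving to an object with `accepted`, `image` and `descriptionShort`.

```javascript
// Sketch: collect up to `count` results, previewing links one at a time.
// `previewLink` is a hypothetical stand-in for the linkPreview.json call.
async function collectRssResults(data, count, previewLink) {
  // Results that already carry an image are used as they are.
  const rssResults = data.filter(item => item.image).slice(0, count);

  // Indices of the results that still need an image.
  const remainingDataIndices = data
    .map((item, index) => (item.image ? -1 : index))
    .filter(index => index !== -1);

  for (const index of remainingDataIndices) {
    if (rssResults.length >= count) break; // enough results collected
    const respData = { ...data[index] };   // copy, so the input stays untouched
    try {
      // Wait for this preview before moving on to the next link.
      const preview = await previewLink(respData.link);
      if (preview.accepted) {
        respData.image = preview.image;
        respData.descriptionShort = preview.descriptionShort;
        rssResults.push(respData);
      }
    } catch (err) {
      // A failed preview is skipped and the next link is tried instead.
    }
  }
  return rssResults;
}
```

Calling `collectRssResults(data, 3, previewLink)` resolves once three results with images are available or every remaining link has been tried, which mirrors the two stopping conditions of the nested calls.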

We store the results as rssResults, which MessageListItems uses to fetch the data and render it. The nested calls to previewURLForImage() end when we have the required count of results or have tried all the links for preview images. We then dispatch the message to the message store.

Next, we improve the UI. I used Material UI Cards to display the results, and react-slick for the carousel-like display.

    <Card className={cardClass} key={i}
          onClick={() => { window.open(tile.link, '_blank') }}>
      {tile.image && (
        <CardMedia>
          <img src={tile.image} alt="" className='card-img'/>
        </CardMedia>
      )}
      <CardTitle title={tile.title} titleStyle={titleStyle}/>
      <CardText>
        <div className='card-text'>{cardText}</div>
        <div className='card-url'>{urlDomain(tile.link)}</div>
      </CardText>
    </Card>

We used the full width of the message section to display the results by not wrapping the result in the message-list-item class. The entire card is hyperlinked to the result's link. Along with the title and description, the URL info is also shown at the bottom right. To get the domain name from the link, the urlDomain() function is used, which relies on the HTML anchor tag to extract the domain info.

    function urlDomain(data) {
      // Let the browser parse the URL through an anchor element
      var a = document.createElement('a');
      a.href = data;
      return a.hostname;
    }
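
The anchor-tag approach needs a DOM, so it only runs in the browser. For comparison, here is a sketch of the same idea using the standard `URL` constructor, which also works in Node.js. The function name `urlDomainFromUrl` is hypothetical and this is not what the SUSI Web Chat code does. One difference to keep in mind: an anchor element resolves relative links against the current page, whereas `new URL()` throws on anything that is not an absolute URL, so the sketch returns an empty string in that case.

```javascript
// Sketch: extract the host name with the standard URL API instead of
// an anchor element.
function urlDomainFromUrl(link) {
  try {
    return new URL(link).hostname;
  } catch (err) {
    // new URL() throws a TypeError for strings that are not absolute URLs.
    return '';
  }
}

console.log(urlDomainFromUrl('http://www.google.com/analytics/terms/')); // prints "www.google.com"
```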

To prevent stretching of images, we use `object-fit: contain;` to make the images fit the image container and align them to the middle.

We finally have our RSS results with images and an improved UI. The complete code can be found at the SUSI WebChat repo. Feel free to contribute.

Resources

- React-Slick Carousel Display Library – https://github.com/akiran/react-slick

- React-Slick Official Examples – http://neostack.com/opensource/react-slick

- Material UI Cards – http://www.material-ui.com/#/components/card

- Mozilla Developers Documentation for Ajax – https://developer.mozilla.org/en-US/docs/AJAX/Getting_Started
