An image can be enhanced by adjusting its brightness, contrast, saturation and similar properties. In the Phimpme Android image application we implemented many such enhancement operations, and all of them are performed by native (C) image processing functions.

An image is made up of color channels: a gray-scale image has a single channel, an opaque color image has three channels and a color image with transparency has four. Each color channel is a two-dimensional matrix of integer values; an image with a resolution of 1920×1080 has 1920 elements in each row and 1080 such rows. For 8-bit images these values range from 0 to 255. In a gray-scale image, 0 corresponds to black and 255 to white. Changing the values in these matrices modifies the image.
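The native functions in this post read these matrices through a `Bitmap` struct with `width`, `height`, `red`, `green` and `blue` members. Its exact definition is not shown here, so the sketch below assumes a planar layout in which each channel is a separate row-major array of `width * height` bytes, which matches the `x + y*width` indexing used later in tuneVignette. The helpers `pixel_index`, `bitmap_alloc` and `bitmap_free` are hypothetical.

```c
#include <stdlib.h>

/* Assumed layout, mirroring the fields used by the tune* functions:
   each channel is its own row-major array of width * height bytes. */
typedef struct {
    unsigned int width;
    unsigned int height;
    unsigned char *red;
    unsigned char *green;
    unsigned char *blue;
} Bitmap;

/* Hypothetical helper: offset of pixel (x, y) inside any channel array. */
static unsigned int pixel_index(const Bitmap *bitmap, unsigned int x, unsigned int y) {
    return x + y * bitmap->width;
}

/* Hypothetical helper: allocate three zeroed (black) channels; returns 0 on success. */
static int bitmap_alloc(Bitmap *bitmap, unsigned int width, unsigned int height) {
    size_t length = (size_t)width * height;
    bitmap->width = width;
    bitmap->height = height;
    bitmap->red = calloc(length, 1);
    bitmap->green = calloc(length, 1);
    bitmap->blue = calloc(length, 1);
    if (!bitmap->red || !bitmap->green || !bitmap->blue) {
        free(bitmap->red);
        free(bitmap->green);
        free(bitmap->blue);
        return -1;
    }
    return 0;
}

static void bitmap_free(Bitmap *bitmap) {
    free(bitmap->red);
    free(bitmap->green);
    free(bitmap->blue);
}
```

A gray-scale image would need only one such array, and an image with transparency would add a fourth (alpha) array.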

The implementations of the enhancement functions in the Phimpme application are given below.

Brightness

Brightness adjustment is the simplest of the image processing functions in Phimpme: brightness is changed by adding the same value to, or subtracting it from, every element of every color channel matrix. Its implementation is given below.

```c
/* Shift every channel value by an offset derived from the seekbar value (0..100). */
void tuneBrightness(Bitmap* bitmap, int val) {
    register unsigned int i;
    unsigned int length = (*bitmap).width * (*bitmap).height;
    unsigned char* red = (*bitmap).red;
    unsigned char* green = (*bitmap).green;
    unsigned char* blue = (*bitmap).blue;

    /* Map 0..100 to an offset of about -63..+63 (50 means no change). */
    signed char bright = (signed char)(((float)(val-50)/100)*127);

    for (i = length; i--; ) {
        red[i] = truncate(red[i]+bright);
        green[i] = truncate(green[i]+bright);
        blue[i] = truncate(blue[i]+bright);
    }
}
```

[Figure: low brightness, normal and high brightness images, in that order]

In this function, the argument val comes from the seekbar implemented in the Java activity. Its value ranges from 0 to 100, so the function maps it to a signed offset, bright, of roughly -63 to +63, with 50 meaning no change. Inside the for loop, each new value is passed through truncate, which, as the name suggests, clamps its argument to the valid range of 0 to 255. It is a macro defined at the top of the C file:

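```c
/* Clamp x to 0..255; each use of x is parenthesized so compound arguments expand safely. */
#define truncate(x) ((x) > 255 ? 255 : ((x) < 0 ? 0 : (x)))
```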
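As a quick check of the ranges involved, the sketch below reproduces the seekbar-to-offset mapping from tuneBrightness and applies it to a single channel value. The clamp is renamed `clamp_u8` here (a hypothetical name) because a function-like macro called `truncate` would clash with the POSIX `truncate()` declaration if `<unistd.h>` were included after it; `brightness_offset` and `adjust_brightness` are likewise illustrative names, not Phimpme's.

```c
/* Clamp to the 0..255 range of an 8-bit channel; every use of x is parenthesized. */
#define clamp_u8(x) ((x) > 255 ? 255 : ((x) < 0 ? 0 : (x)))

/* Same mapping as tuneBrightness: seekbar 0..100 -> offset of about -63..+63.
   The float-to-integer conversion truncates toward zero, so +/-63.5 becomes +/-63. */
static signed char brightness_offset(int val) {
    return (signed char)(((float)(val - 50) / 100) * 127);
}

/* Brighten or darken a single channel value and clamp the result. */
static unsigned char adjust_brightness(unsigned char value, int val) {
    int shifted = value + brightness_offset(val);
    return (unsigned char)clamp_u8(shifted);
}
```

Parenthesizing each use of `x` matters: written as `(x > 255)`, an argument built with an operator that binds more loosely than `>`, such as `&`, `|` or `?:`, would be mis-parsed. The simple sums passed in this post are unaffected either way.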

Contrast

In the Phimpme application, contrast is adjusted by making pixels that are brighter than mid-gray (128) even brighter and pixels that are darker than mid-gray even darker. The editor uses the following formula for this adjustment:

$$F = \frac{259\,(C + 255)}{255\,(259 - C)}, \qquad \text{pixel}'[i] = F\,(\text{pixel}[i] - 128) + 128$$

Here C is the contrast value and pixel[i] is the value of the matrix element being modified; the result is clamped to the range 0 to 255. In tuneContrast, C is derived from the seekbar as ((val - 50)/100)·255, i.e. roughly -127 to +127. C = 0 gives F = 1 and leaves the image unchanged, a positive C gives F > 1 (more contrast) and a negative C gives F < 1 (less contrast).
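A small worked example shows what the factor does. The sketch below (hypothetical function names) computes the factor 259(C + 255) / (255(259 - C)) the same way tuneContrast does and applies it to one channel value around mid-gray 128. With C = 64 the factor is about 1.66, so a value of 200 is pushed up to 247 and a value of 60 is pushed down to 16; with C = 0 the factor is exactly 1 and the value is unchanged.

```c
/* Contrast factor, computed as in tuneContrast (float division). */
static float contrast_factor(int contrast) {
    return (float)(259 * (contrast + 255)) / (255 * (259 - contrast));
}

/* Stretch (factor > 1) or compress (factor < 1) a value around 128, then clamp to 0..255. */
static unsigned char adjust_contrast(unsigned char value, int contrast) {
    int result = (int)(contrast_factor(contrast) * (value - 128)) + 128;
    if (result > 255) result = 255;
    if (result < 0) result = 0;
    return (unsigned char)result;
}
```

Note that the `(int)` conversion truncates toward zero, so the scaled distance from 128 is rounded towards mid-gray before 128 is added back.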

[Figure: low contrast, normal and high contrast images, in that order]

Applying this formula to every value in each channel gives the following function:

```c
/* Stretch or compress channel values around mid-gray (128). */
void tuneContrast(Bitmap* bitmap, int val) {
    register unsigned int i;
    unsigned int length = (*bitmap).width * (*bitmap).height;
    unsigned char* red = (*bitmap).red;
    unsigned char* green = (*bitmap).green;
    unsigned char* blue = (*bitmap).blue;

    /* Map 0..100 to a contrast value C of about -127..+127. */
    int contrast = (int)(((float)(val-50)/100)*255);
    float factor = (float)(259*(contrast + 255))/(255*(259-contrast));

    for (i = length; i--; ) {
        red[i] = truncate((int)(factor*(red[i]-128))+128);
        green[i] = truncate((int)(factor*(green[i]-128))+128);
        blue[i] = truncate((int)(factor*(blue[i]-128))+128);
    }
}
```

Hue

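Hue shift is cyclic: shifting the hue by a full 360° brings the image back to where it started, so an image with a hue shift of 0° looks identical to one with a shift of 360°. The figure below illustrates this by showing how an image changes as its hue is shifted step by step. The definition of hue and the corresponding formulae can be found on Wikipedia. In tuneHue, the seekbar value is mapped to an angle H = 3.6·val degrees (0° to 360°), and the new RGB values are computed directly from the old ones with a 3×3 matrix built from cos H and sin H. Its implementation is shown below.

[Figure: hue shifted step by step from 0° to 360°; image source: Wikipedia]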

```c
#include <math.h>

#ifndef PI
#define PI 3.14159265358979323846 /* assumed: PI is not a standard <math.h> name */
#endif

/* Rotate the hue of every pixel by H = 3.6 * val degrees (seekbar 0..100 -> 0..360). */
void tuneHue(Bitmap* bitmap, int val) {
    register unsigned int i;
    unsigned int length = (*bitmap).width * (*bitmap).height;
    unsigned char* red = (*bitmap).red;
    unsigned char* green = (*bitmap).green;
    unsigned char* blue = (*bitmap).blue;

    double H = 3.6*val;
    double h_cos = cos(H*PI/180);
    double h_sin = sin(H*PI/180);
    double r,g,b;

    for (i = length; i--; ) {
        /* Normalize the channels to 0..1. */
        r = (double)red[i]/255;
        g = (double)green[i]/255;
        b = (double)blue[i]/255;

        /* Apply the 3x3 hue-rotation matrix, scale back to 0..255 and clamp. */
        red[i] = truncate((int)(255*((.299+.701*h_cos+.168*h_sin)*r + (.587-.587*h_cos+.330*h_sin)*g + (.114-.114*h_cos-.497*h_sin)*b)));
        green[i] = truncate((int)(255*((.299-.299*h_cos-.328*h_sin)*r + (.587+.413*h_cos+.035*h_sin)*g + (.114-.114*h_cos+.292*h_sin)*b)));
        blue[i] = truncate((int)(255*((.299-.3*h_cos+1.25*h_sin)*r + (.587-.588*h_cos-1.05*h_sin)*g + (.114+.886*h_cos-.203*h_sin)*b)));
    }
}
```

Saturation

Saturation is the colorfulness of an image. The figure below shows null-saturation, unmodified and highly saturated versions of an image, in that order. The technical definition of saturation and the formulae for computing it from RGB values are given on Wikipedia. In the Phimpme application we used those formulae in reverse, computing new RGB values from the desired saturation: each channel is moved towards the pixel's perceived brightness to lower the saturation, or away from it to raise the saturation.

Its implementation is given below.

[Figure: low saturation, normal and high saturation images, in that order]

```c
#include <math.h>

/* Scale each channel's distance from the pixel's perceived brightness. */
void tuneSaturation(Bitmap* bitmap, int val) {
    register unsigned int i;
    unsigned int length = (*bitmap).width * (*bitmap).height;
    unsigned char* red = (*bitmap).red;
    unsigned char* green = (*bitmap).green;
    unsigned char* blue = (*bitmap).blue;

    /* Map 0..100 to a factor of 0..2 (1 means no change, 0 means gray). */
    double sat = 2*((double)val/100);
    double temp;
    double r_val = 0.299, g_val = 0.587, b_val = 0.114;
    double r,g,b;

    for (i = length; i--; ) {
        r = (double)red[i]/255;
        g = (double)green[i]/255;
        b = (double)blue[i]/255;

        /* Perceived brightness of the pixel. */
        temp = sqrt( r * r * r_val +
                     g * g * g_val +
                     b * b * b_val );

        red[i] = truncate((int)(255*(temp + (r - temp) * sat)));
        green[i] = truncate((int)(255*(temp + (g - temp) * sat)));
        blue[i] = truncate((int)(255*(temp + (b - temp) * sat)));
    }
}
```

Temperature

Raising the temperature setting makes an image warmer, with more red and less blue; lowering it makes the image cooler, with less red and more blue. In the Phimpme application we implemented this simply by shifting the red and blue channel matrices in opposite directions, the same way as in the brightness adjustment. The green channel is not modified.

[Figure: low temperature, normal and high temperature images, in that order]

```c
void tuneTemperature(Bitmap* bitmap, int val) {
    register unsigned int i;
    unsigned int length = (*bitmap).width * (*bitmap).height;
    unsigned char* red = (*bitmap).red;
    unsigned char* blue = (*bitmap).blue;

    /* Map 0..100 to -75..+75; the parentheses keep 1.5 from being cast to 1 first. */
    int temperature = (int)(1.5*(val-50));

    for (i = length; i--; ) {
        red[i] = truncate(red[i] + temperature);   /* warmer: more red */
        blue[i] = truncate(blue[i] - temperature); /* warmer: less blue */
    }
}
```
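Where the cast sits in the scaling line matters. `(int)1.5*(val-50)` converts only the constant, so it evaluates to `1*(val-50)` (a range of -50 to +50), whereas `(int)(1.5*(val-50))`, the form tuneTint uses, scales first and gives -75 to +75. The sketch below (all function names hypothetical) shows both forms and the resulting shift of one pixel's red and blue values.

```c
/* The cast binds tighter than '*': only 1.5 is converted, giving 1 * (val - 50). */
static int offset_cast_first(int val) {
    return (int)1.5 * (val - 50);
}

/* Scale first, then convert: 1.5 * (val - 50) truncated toward zero. */
static int offset_scale_first(int val) {
    return (int)(1.5 * (val - 50));
}

static unsigned char clamp_channel(int x) {
    return (unsigned char)(x > 255 ? 255 : (x < 0 ? 0 : x));
}

/* Warm (offset > 0) or cool (offset < 0) one pixel: red up, blue down, green untouched. */
static void warm_pixel(unsigned char *r, unsigned char *b, int offset) {
    *r = clamp_channel(*r + offset);
    *b = clamp_channel(*b - offset);
}
```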

Tint

In the Phimpme application, tint is adjusted in the same way as temperature, except that only the green channel is modified instead of the red and blue channels. Increasing the tint removes green and gives the image a magenta tone; decreasing it adds green and gives the image a greenish tone. The code below shows how we implemented this function in the image editor of the Phimpme application.

[Figure: low tint, normal and high tint images, in that order]

```c
void tuneTint(Bitmap* bitmap, int val) {
    register unsigned int i;
    unsigned int length = (*bitmap).width * (*bitmap).height;
    unsigned char* green = (*bitmap).green;

    /* Map 0..100 to -75..+75; a positive tint removes green (magenta cast). */
    int tint = (int)(1.5*(val-50));

    for (i = length; i--; ) {
        green[i] = truncate(green[i] - tint);
    }
}
```
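The direction of the shift is easiest to see on a neutral gray pixel (128, 128, 128). In the sketch below (hypothetical names `tint_offset` and `tint_green`), val = 100 lowers green to 53 while red and blue stay at 128, so the pixel leans towards magenta; val = 0 raises green to 203 and the pixel leans towards green.

```c
/* Same scaling as tuneTint: seekbar 0..100 -> tint of -75..+75. */
static int tint_offset(int val) {
    return (int)(1.5 * (val - 50));
}

/* Only the green channel changes; a positive tint removes green, a negative tint
   adds green. The result is clamped to 0..255. */
static unsigned char tint_green(unsigned char green, int val) {
    int g = green - tint_offset(val);
    return (unsigned char)(g > 255 ? 255 : (g < 0 ? 0 : g));
}
```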

Vignette

Vignetting is the reduction of an image's brightness towards its edges compared with its center. It is applied to draw the viewer's attention to the center of the image.

[Figure: normal and vignetted images]

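To implement vignetting in the Phimpme application, we scale each pixel's brightness by a radial gradient value computed from the pixel's distance to the image center, relative to the distance from a corner to the center. The multiplier is cos⁴(0.8·d/maxDis), where d is the pixel's distance from the center and maxDis is the corner-to-center distance multiplied by a radius of 1.5 - val/100. Its implementation in Phimpme is shown below.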

```c
#include <math.h>

/* Euclidean distance between (ax, ay) and (bx, by). Taking doubles keeps the
   fractional image center (cx, cy) from being truncated to an integer. */
double dist(double ax, double ay, double bx, double by) {
    return sqrt(pow(ax - bx, 2) + pow(ay - by, 2));
}

void tuneVignette(Bitmap* bitmap, int val) {
    register unsigned int x, y;
    unsigned int width = (*bitmap).width, height = (*bitmap).height;
    unsigned char* red = (*bitmap).red;
    unsigned char* green = (*bitmap).green;
    unsigned char* blue = (*bitmap).blue;

    /* A larger val gives a smaller radius and therefore stronger darkening. */
    double radius = 1.5-((double)val/100), power = 0.8;
    double cx = (double)width/2, cy = (double)height/2;
    double maxDis = radius * dist(0, 0, cx, cy);
    double temp, temp_s;

    for (y = 0; y < height; y++) {
        for (x = 0; x < width; x++) {
            /* Scaled distance from the center, then a cos^4 falloff. */
            temp = dist(cx, cy, x, y) / maxDis;
            temp = temp * power;
            temp_s = pow(cos(temp), 4);

            red[x+y*width] = truncate((int)(red[x+y*width]*temp_s));
            green[x+y*width] = truncate((int)(green[x+y*width]*temp_s));
            blue[x+y*width] = truncate((int)(blue[x+y*width]*temp_s));
        }
    }
}
```

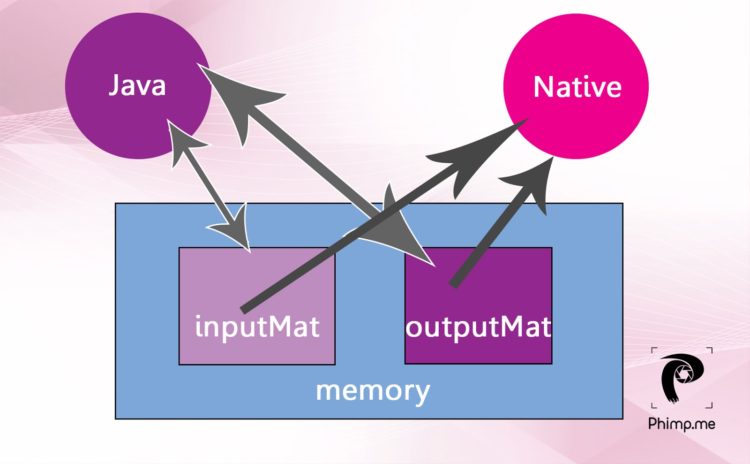

Each of the functions above is called from the main.c file through a corresponding JNI function. These JNI functions are declared in Java with matching names, and the Java code passes the arguments to them. If you are not familiar with JNI, refer to my previous posts.
