Fetching data from the server every time it is needed adds network load and makes the app depend on both the server and the network to display anything. We therefore store chat messages in an offline database, so that messages can be shown to the user even when no network is available, which improves the user experience. In SUSI iOS, messages received from the server are saved locally using Realm, chosen because it is easy to use, fast and efficient. The reasons for this choice are listed below.

The major upsides of Realm are:

- It is free of charge.

- It is fast and easy to use.

- It allows unlimited use.

- It runs on its own persistence engine, built for speed and performance.

Below are the steps to install and use Realm in the iOS Client:

Installation:

- Install CocoaPods.

- Run `pod repo update` in the root folder.

- In your Podfile, add `use_frameworks!` and `pod 'RealmSwift'` to your main and test targets.

- From the command line, run `pod install`.

- To open the project, use the `.xcworkspace` file that CocoaPods generates in the project folder, next to the `.xcodeproj` file.

After installation, we import `RealmSwift` in the `AppDelegate` file and configure Realm as below:

    func initializeRealm() {
        // Bump `schemaVersion` whenever the stored properties of a model change.
        var config = Realm.Configuration(
            schemaVersion: 1,
            migrationBlock: { _, oldSchemaVersion in
                if oldSchemaVersion < 1 {
                    // Nothing to do!
                }
            })
        // Store the database as "susi.realm" in the default Realm directory.
        config.fileURL = config.fileURL?.deletingLastPathComponent().appendingPathComponent("susi.realm")
        Realm.Configuration.defaultConfiguration = config
    }

Next, let's create a few models, which are used to save data to the database and to retrieve it in an easily usable form. Since the SUSI server has a number of action types, we will cover some of them, along with their models and how they are used to store and retrieve data. Below are the action types that the server currently supports.

    enum ActionType: String {
        case answer
        case websearch
        case rss
        case table
        case map
        case anchor
    }
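Because `ActionType` is backed by `String`, a type string received from the server can be turned into a case with the synthesized `init(rawValue:)`. The sketch below repeats the enum so that it is self-contained; the helper `isSupportedActionType` is a hypothetical name used only for illustration and is not part of the SUSI iOS code.

```swift
// Raw values default to the case names, e.g. ActionType.map.rawValue == "map".
enum ActionType: String {
    case answer
    case websearch
    case rss
    case table
    case map
    case anchor
}

// Hypothetical helper: true if the string names one of the known action types.
func isSupportedActionType(_ rawType: String) -> Bool {
    // init(rawValue:) returns nil for strings that match no case.
    return ActionType(rawValue: rawType) != nil
}

if let type = ActionType(rawValue: "map") {
    print("Parsed action type: \(type.rawValue)")
}
print(isSupportedActionType("video"))
```

Comparing against `rawValue`, as the later snippets do, and converting with `init(rawValue:)` are equivalent ways of matching the server's type string; the second also makes unknown types explicit.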

Let's start by creating the base model, called `Message`. To make it a Realm object, we import `RealmSwift` and inherit from `Object`.

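    class Message: Object {
        // Realm-managed properties are declared `@objc dynamic`.
        @objc dynamic var queryDate = NSDate()
        @objc dynamic var answerDate = NSDate()
        @objc dynamic var message: String = ""
        @objc dynamic var fromUser = true
        @objc dynamic var actionType = ActionType.answer.rawValue
        // Optional links to the object holding each action's data.
        @objc dynamic var answerData: AnswerAction?
        @objc dynamic var mapData: MapAction?
        @objc dynamic var anchorData: AnchorAction?
    }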

Let’s study these properties of the message one by one.

- `queryDate`: saves the date-time the query was made

- `answerDate`: saves the date-time the query response was received

- `message`: stores the query/message that was sent to the server

- `fromUser`: a boolean that keeps track of who created the message

- `actionType`: stores the action type

- `answerData`, `rssData`, `mapData`, `anchorData`: the data objects that actually store the respective action's data (`rssData` is omitted from the listing above)

To initialize this object, we need a method that takes the data received from the server as input.

    // Save the query and answer dates.
    if let queryDate = data[Client.ChatKeys.QueryDate] as? String,
        let answerDate = data[Client.ChatKeys.AnswerDate] as? String {
        // Keep the default dates if the server sends an unparseable string.
        if let date = dateFormatter.date(from: queryDate) {
            message.queryDate = date as NSDate
        }
        if let date = dateFormatter.date(from: answerDate) {
            message.answerDate = date as NSDate
        }
    }

    // Fill in the fields that belong to this action's type.
    if let type = action[Client.ChatKeys.ResponseType] as? String,
        let data = answers[0][Client.ChatKeys.Data] as? [[String : AnyObject]] {
        if type == ActionType.answer.rawValue {
            message.message = action[Client.ChatKeys.Expression] as? String ?? ""
            message.actionType = ActionType.answer.rawValue
            message.answerData = AnswerAction(action: action)
        } else if type == ActionType.map.rawValue {
            message.actionType = ActionType.map.rawValue
            message.mapData = MapAction(action: action)
        } else if type == ActionType.anchor.rawValue {
            message.actionType = ActionType.anchor.rawValue
            message.anchorData = AnchorAction(action: action)
            message.message = message.anchorData?.text ?? ""
        }
    }
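The snippet above relies on a `dateFormatter` that is not shown. Below is a minimal sketch of how such a formatter might be configured. The date format string and the sample timestamp are assumptions and need to match whatever the server actually sends; `parseDate` is a hypothetical helper.

```swift
import Foundation

// Assumed format, e.g. "2017-06-02T18:07:36.646Z"; adjust to the server's real format.
let dateFormatter: DateFormatter = {
    let formatter = DateFormatter()
    formatter.dateFormat = "yyyy-MM-dd'T'HH:mm:ss.SSS'Z'"
    // A fixed locale and time zone keep parsing independent of device settings.
    formatter.locale = Locale(identifier: "en_US_POSIX")
    formatter.timeZone = TimeZone(secondsFromGMT: 0)
    return formatter
}()

// Hypothetical helper: returns the parsed date, or `fallback` if parsing fails.
func parseDate(_ string: String, fallback: Date = Date()) -> Date {
    return dateFormatter.date(from: string) ?? fallback
}

let parsed = parseDate("2017-06-02T18:07:36.646Z")
let invalid = parseDate("not a date", fallback: Date(timeIntervalSince1970: 0))
print(parsed, invalid)
```

Returning a fallback instead of force-unwrapping means a malformed date from the server cannot crash the app.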

Since the server's response to a single query may contain several actions, we loop over them inside a method and save each one. This means we need a list that holds the messages created for the actions. For each action in the loop, the corresponding data is saved into its specific object.
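The loop described above could look roughly like the following sketch. It uses a plain struct as a stand-in for the Realm-backed `Message` model so that it runs on its own. The key names `"type"` and `"expression"` are assumptions (the app reads them from `Client.ChatKeys`), and `makeMessages` is a hypothetical name.

```swift
// Simplified stand-in for the Realm-backed `Message` model.
struct SimpleMessage {
    var actionType: String
    var message: String
}

// Assumed key names; the app takes them from `Client.ChatKeys`.
let typeKey = "type"
let expressionKey = "expression"

// Builds one message per action found in the server response.
func makeMessages(from actions: [[String: Any]]) -> [SimpleMessage] {
    var messages: [SimpleMessage] = []
    for action in actions {
        // Skip actions that carry no type string.
        guard let type = action[typeKey] as? String else { continue }
        let text = action[expressionKey] as? String ?? ""
        messages.append(SimpleMessage(actionType: type, message: text))
    }
    return messages
}

let actions: [[String: Any]] = [
    [typeKey: "answer", expressionKey: "Here is Berlin"],
    [typeKey: "map"],
    ["unexpected": 1]
]
let messages = makeMessages(from: actions)
print(messages.count) // prints 2
```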

Let's discuss the individual action objects now.

1) AnswerAction

    class AnswerAction: Object {
        @objc dynamic var expression: String = ""

        convenience init(action: [String : AnyObject]) {
            self.init()
            // Keep the empty default if the action has no expression.
            if let expression = action[Client.ChatKeys.Expression] as? String {
                self.expression = expression
            }
        }
    }

This is the simplest action type implementation. It contains a single property, `expression`, of type `String`. To initialize it, we take the action object, extract the value for the expression key and save it.

    if type == ActionType.answer.rawValue {
        message.message = action[Client.ChatKeys.Expression] as? String ?? ""
        message.actionType = ActionType.answer.rawValue
        // pass action object and save data in `answerData`
        message.answerData = AnswerAction(action: action)
    }

Above is how an answer action is detected and its data saved in the `answerData` property.

2) MapAction

    class MapAction: Object {
        @objc dynamic var latitude: Double = 0.0
        @objc dynamic var longitude: Double = 0.0
        @objc dynamic var zoom: Int = 13

        convenience init(action: [String : AnyObject]) {
            self.init()
            // The server sends all three values as strings. Convert them and
            // keep the defaults above if any value is missing or malformed.
            if let latitude = action[Client.ChatKeys.Latitude] as? String,
                let longitude = action[Client.ChatKeys.Longitude] as? String,
                let zoom = action[Client.ChatKeys.Zoom] as? String,
                let latitudeValue = Double(latitude),
                let longitudeValue = Double(longitude),
                let zoomValue = Int(zoom) {
                self.latitude = latitudeValue
                self.longitude = longitudeValue
                self.zoom = zoomValue
            }
        }
    }

This action implementation contains three properties: `latitude`, `longitude` and `zoom`. Since the server sends these values as strings, each of them needs to be converted to its respective type. Every property has a default value, which is kept if the server sends a missing or illegal value.

3) AnchorAction

    class AnchorAction: Object {
        @objc dynamic var link: String = ""
        @objc dynamic var text: String = ""

        convenience init(action: [String : AnyObject]) {
            self.init()
            // Keep the empty defaults unless both values are present.
            if let link = action[Client.ChatKeys.Link] as? String,
                let text = action[Client.ChatKeys.Text] as? String {
                self.link = link
                self.text = text
            }
        }
    }

Here, the link to the OpenStreetMap website is saved so that the image to display can be retrieved.

Finally, we need to call the API, create the message objects and use the `write` block of a Realm instance to save them into the database.

    if success {
        self.collectionView?.performBatchUpdates({
            for message in messages! {
                // Realm write block: persist the message, then show it.
                try! self.realm.write {
                    self.realm.add(message)
                    self.messages.append(message)
                    let indexPath = IndexPath(item: self.messages.count - 1, section: 0)
                    self.collectionView?.insertItems(at: [indexPath])
                }
            }
        }, completion: { (_) in
            self.scrollToLast()
        })
    }

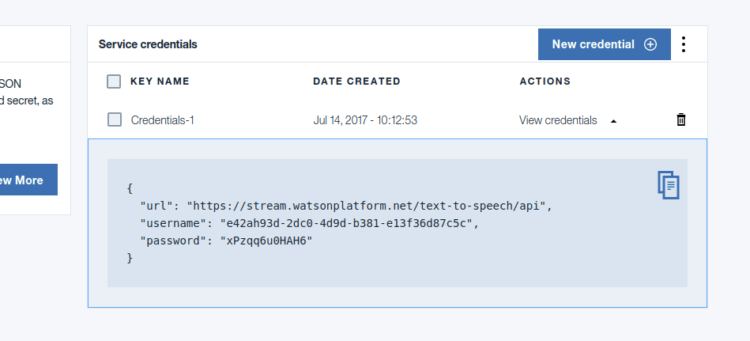

list of message items and inserted into the collection view.Below is the output of the Realm Browser which is a UI for viewing the database.
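To show stored messages when no network is available, they can be read back from Realm, for example when the chat screen loads. The following is a minimal sketch rather than the app's actual code: it repeats a trimmed-down `Message` model so that it is self-contained, uses an in-memory Realm, and introduces a hypothetical helper `loadStoredMessages`.

```swift
import Foundation
import RealmSwift

// Trimmed-down copy of the `Message` model, repeated so the sketch is self-contained.
class Message: Object {
    @objc dynamic var queryDate = NSDate()
    @objc dynamic var message: String = ""
    @objc dynamic var fromUser = true
}

// Hypothetical helper: returns all stored messages, oldest query first.
func loadStoredMessages(from realm: Realm) -> [Message] {
    let results = realm.objects(Message.self)
        .sorted(byKeyPath: "queryDate", ascending: true)
    return Array(results)
}

do {
    // An in-memory Realm keeps this example away from the app's database file.
    let config = Realm.Configuration(inMemoryIdentifier: "sketch")
    let realm = try Realm(configuration: config)

    let first = Message()
    first.message = "Hello"
    try realm.write {
        realm.add(first)
    }

    print(loadStoredMessages(from: realm).count)
} catch {
    print("Realm error: \(error)")
}
```

In the app itself, calling `try Realm()` without arguments uses `Realm.Configuration.defaultConfiguration`, so it would open the `susi.realm` file configured in `initializeRealm()`.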
