Query Model Structure of Loklak Search

Need to restructure

Earlier versions of the Loklak Search application suffered from breakage whenever a new feature was added. The main cause of these unintended bugs was identified as the existing query structure, which consisted of a single entity: a string named queryString.

    export interface Query {
      queryString: string;
    }

This single query string property was good enough for the simple string-based searches that were the goal of the application in its initial phases. As the application progressed, we realized that a purely string-based implementation would not be feasible in the long term, because there are only a limited number of things we can do with strings. It becomes extremely difficult to set and reset portions of the string as requirements change. The main vision behind the alternate architecture was therefore to make it easy to enable and disable features on the fly.
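To make the difficulty concrete, here is a minimal sketch of what changing a single feature looks like when the only state is a string. The helper `setLocationInString` is hypothetical and not taken from the Loklak Search code base; it assumes the `near:` and `since:` tokens that appear in the conversion function later in this article.

```typescript
// Hypothetical helper, for illustration only: with a string as the sole
// state, changing the location means finding and replacing a token.
function setLocationInString(queryString: string, location: string): string {
  // Strip any existing near: token before appending the new one.
  const stripped = queryString.replace(/\s*near:\S+/g, '').trim();
  return location ? `${stripped} near:${location}` : stripped;
}

const first = setLocationInString('fossasia since:2017-01-01', 'Singapore');
const second = setLocationInString(first, 'Berlin');
const cleared = setLocationInString(second, '');

console.log(first);   // fossasia since:2017-01-01 near:Singapore
console.log(second);  // fossasia since:2017-01-01 near:Berlin
console.log(cleared); // fossasia since:2017-01-01
```

Every additional feature would need its own pattern of this kind, and each pattern has to avoid disturbing the tokens written by the others.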

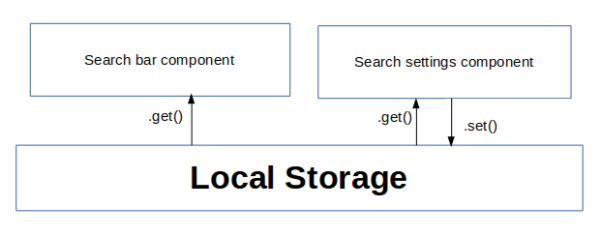

Application Structure

Therefore, to overcome the difficulties of the simple string-based structure, we introduced an attribute-based structure for queries. The attribute-based structure is simpler to understand, which makes the state of the query easier to maintain in the application.

    export interface Query {
      displayString: string;
      queryString: string;
      routerString: string;
      filter: FilterList;
      location: string;
      timeBound: TimeBound;
      from: boolean;
    }

The reason this is called an attribute-based structure is that each property of the interface is independent of the others. Each one can be thought of as a separate little key placed on the query, and setting one key does not affect any other. This means that if I want to set an attribute on the query, it does not matter which other attributes are already present. The query will eventually be processed and sent to the server, and the corresponding valid results, if any exist, will be shown to the user.

Now the question arises: how do we modify the values of these attributes? This interface is instantiated in the Redux state, so the question largely answers itself. Modifications to the Redux state corresponding to the query structure are made by specific reducers meant for modifying each attribute, and these reducers are in turn triggered by corresponding actions.

    export const ActionTypes = {
      VALUE_CHANGE: '[Query] Value Change',
      FILTER_CHANGE: '[Query] Filter Change',
      LOCATION_CHANGE: '[Query] Location Change',
      TIME_BOUND_CHANGE: '[Query] Time Bound Change',
    };

This ActionTypes object contains the action types used to trigger the reducers. These actions can be dispatched by any component in response to any user interaction, thus modifying a particular state attribute via the reducer.
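As a rough illustration of how such actions could drive the state, the following framework-free sketch handles all four action types in a single reducer function, with one case per attribute. It is not the project's actual reducer. The shapes of `FilterList` and `TimeBound`, the action payloads, the choice that `VALUE_CHANGE` updates `displayString`, and the names `QueryAction`, `initialState`, and `queryReducer` are all assumptions made for this example.

```typescript
// Assumed shapes: the article does not show FilterList or TimeBound.
interface FilterList { image: boolean; video: boolean; }
interface TimeBound { since: Date | null; until: Date | null; }

interface Query {
  displayString: string;
  queryString: string;
  routerString: string;
  filter: FilterList;
  location: string;
  timeBound: TimeBound;
  from: boolean;
}

const ActionTypes = {
  VALUE_CHANGE: '[Query] Value Change',
  FILTER_CHANGE: '[Query] Filter Change',
  LOCATION_CHANGE: '[Query] Location Change',
  TIME_BOUND_CHANGE: '[Query] Time Bound Change',
} as const;

// Assumed payloads: each action carries the new value of one attribute.
type QueryAction =
  | { type: typeof ActionTypes.VALUE_CHANGE; payload: string }
  | { type: typeof ActionTypes.FILTER_CHANGE; payload: FilterList }
  | { type: typeof ActionTypes.LOCATION_CHANGE; payload: string }
  | { type: typeof ActionTypes.TIME_BOUND_CHANGE; payload: TimeBound };

const initialState: Query = {
  displayString: '',
  queryString: '',
  routerString: '',
  filter: { image: false, video: false },
  location: '',
  timeBound: { since: null, until: null },
  from: false,
};

// Each case replaces exactly one attribute and copies the rest unchanged.
function queryReducer(state: Query = initialState, action: QueryAction): Query {
  switch (action.type) {
    case ActionTypes.VALUE_CHANGE:
      return { ...state, displayString: action.payload };
    case ActionTypes.FILTER_CHANGE:
      return { ...state, filter: action.payload };
    case ActionTypes.LOCATION_CHANGE:
      return { ...state, location: action.payload };
    case ActionTypes.TIME_BOUND_CHANGE:
      return { ...state, timeBound: action.payload };
    default:
      return state;
  }
}

// Usage: changing the location leaves every other attribute untouched.
const typed = queryReducer(initialState, { type: ActionTypes.VALUE_CHANGE, payload: 'fossasia' });
const located = queryReducer(typed, { type: ActionTypes.LOCATION_CHANGE, payload: 'Singapore' });
```

Because every case returns a new object instead of mutating the old one, the previous state stays intact, which is what keeps the attributes independent of one another.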

Converting from object to string

For our API endpoint to understand the query, we need to send a string in the format the API accepts. This requires converting the query state to a query string dynamically, so we need a simple function that takes the query state as input and returns the query string as output.

    export function parseQueryToQueryString(query: Query): string {
      // Start from the text the user typed.
      let qs: string;
      qs = query.displayString;

      // Append one token per attribute that is set.
      if (query.location) {
        qs += ` near:${query.location}`;
      }
      if (query.timeBound.since) {
        qs += ` since:${parseDateToApiAcceptedFormat(query.timeBound.since)}`;
      }
      if (query.timeBound.until) {
        qs += ` until:${parseDateToApiAcceptedFormat(query.timeBound.until)}`;
      }

      return qs;
    }

In this function we simply check the various attributes set in the structure, update the query string accordingly, and return it. If we eventually have to convert to a string anyway, what is the advantage of this approach? The main advantage is that we know the query structure beforehand and use it to build the string, rather than arbitrarily inserting and removing pieces of information in a string. Whenever we update any attribute of the query state, the query string is generated afresh instead of modifying the old string.
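The sketch below shows this regeneration in action. To keep it self-contained it uses a trimmed-down `QueryLike` interface with only the attributes the conversion function reads. The body of `parseDateToApiAcceptedFormat` is not shown in this article, so the `YYYY-MM-DD` format used here is an assumption, as are the example values.

```typescript
// Trimmed-down query shape for this example only.
interface TimeBound { since: Date | null; until: Date | null; }
interface QueryLike { displayString: string; location: string; timeBound: TimeBound; }

// Assumed implementation: formats a date as YYYY-MM-DD in UTC.
function parseDateToApiAcceptedFormat(date: Date): string {
  return date.toISOString().slice(0, 10);
}

function parseQueryToQueryString(query: QueryLike): string {
  let qs = query.displayString;
  if (query.location) {
    qs += ` near:${query.location}`;
  }
  if (query.timeBound.since) {
    qs += ` since:${parseDateToApiAcceptedFormat(query.timeBound.since)}`;
  }
  if (query.timeBound.until) {
    qs += ` until:${parseDateToApiAcceptedFormat(query.timeBound.until)}`;
  }
  return qs;
}

const base: QueryLike = {
  displayString: 'fossasia',
  location: '',
  timeBound: { since: null, until: null },
};
const withLocation: QueryLike = { ...base, location: 'Singapore' };
const withSince: QueryLike = {
  ...withLocation,
  timeBound: { since: new Date(Date.UTC(2017, 0, 1)), until: null },
};
// Disabling the location is just clearing one attribute; no string surgery.
const locationCleared: QueryLike = { ...withSince, location: '' };

console.log(parseQueryToQueryString(base));            // fossasia
console.log(parseQueryToQueryString(withLocation));    // fossasia near:Singapore
console.log(parseQueryToQueryString(withSince));       // fossasia near:Singapore since:2017-01-01
console.log(parseQueryToQueryString(locationCleared)); // fossasia since:2017-01-01
```

Note that removing the location never touches the `since:` token: the string is rebuilt from whatever attributes are currently set.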

Conclusion

This approach lets the application modify the search queries sent to the server in a streamlined and logical way, using just a simple data structure. This query model has given us many advantages, visible in the stability and performance of the application. It also cuts out the messy regex matching of typed queries, which in turn helps us build simpler queries.

